Have you ever had that feeling when, at a crowded coffee shop, the Wi-Fi is so slow you can barely load your email, and you just know it’s because some genius is hogging all the bandwidth by torrenting the entire Marvel Cinematic Universe in 4K?

APIs suffer a similar indignity when they don’t have sensible rate limits in place. To help you remedy this appalling state of affairs, this article explores how API rate limiting works and why it’s vital for maintaining system stability and security.

What is API rate limiting?

API rate limiting is a set of rules that caps how many requests a user or bot can make to an API in a given time window. This restriction is based on administrative rules or policies set by the API provider, and there are a bunch of ways to do it: token buckets, leaky buckets, sliding windows, and fixed windows, for example. But unless you’re really into computer science, the basic idea is this: you get a certain number of requests, and then you have to wait.

Without rate limits, you’d have unlimited API access, meaning you could send as many requests as you want. While that might sound fine for small enough projects, it’s a denial of service waiting to happen in most systems. Zapier, for example, uses API rate limiting to maintain fair usage and prevent one person’s runaway script from crashing the platform for everyone.

Why is API rate limiting important?

API rate limiting is important in the same way that locks, fire codes, and zipper merging are important. Without them, safety and order are compromised by unchecked behavior. Let’s dig into the main reasons you need rate limiting and how to think about it for your own API integrations.

Fair usage

API rate limiting stops one power-hungry user or a broken script from slamming your poor API with 50,000 requests a minute and slowing things down for everyone else. Clear boundaries mean everyone gets a fair slice of the pie (or in this case, the A“PI”).

Security

While not a silver bullet (silver being notably scarce in modern cybersecurity), rate limiting is a critical and often underestimated security tool. It helps blunt denial of service (DoS) and distributed denial of service (DDoS) campaigns, brute-force credential guessing, unauthorized data scraping, and other attempts to hammer your server with requests. Capping request rates doesn’t end malice, but it does force it to slow down.

System stability

Infrastructure has feelings, chiefly exhaustion. By controlling resource allocation, API rate limiting prevents a sudden flood of activity from overwhelming your database, memory, or CPU. This is what stops your service from slowing to a crawl or crashing entirely during a traffic spike.

Cost management

As API usage scales, so do costs, and not always in a way that aligns with revenue or reason. Rate limits give you a lever on that growth: by capping how many requests each client can make, they put a ceiling on the compute, bandwidth, and database load (and the bills attached to them) that rising demand can generate.

How to implement API rate limiting

There are several methods to implement API rate limiting, each suited to different needs and scenarios.

Algorithm-based

Algorithm-based rate limiting is a smart way to control API traffic. Instead of just blocking requests, it uses specific rules to keep usage at a healthy level. Here are a few of the most common methods:

- Token bucket: Imagine a bucket filled with tokens. Every time an API request comes in, it has to “spend” one token to get through. The bucket gets refilled at a steady, fixed rate (say, 10 tokens per second), up to a maximum capacity. If the bucket holds 100 tokens and is full when a burst of 100 requests arrives, all 100 get in instantly. After that, new requests have to wait for new tokens to be added. This is perfect for handling things like a flash sale: you can let a lot of people in at once without ceding control over long-term usage.

- Leaky bucket: Think of a bucket with a small hole in the bottom. All incoming requests are dumped into the top, but they can only “leak” out of the hole at one slow, constant rate. If a huge traffic spike hits, the bucket fills up (forming a queue), and once it’s full, additional requests spill over and are rejected. Either way, your server on the other side only ever sees the steady, predictable drip. This is perfect for smoothing out spiky traffic, like when 19 million of my closest friends and I flooded HBO to watch the series finale of “Game of Thrones.”

- Sliding window: The most precise variant, often called a sliding window log, records the timestamp of every request and slides the window forward as time progresses. When a new request comes in, the system looks back and counts how many requests have arrived in the last, say, 60 seconds. This gives precise control over recent activity, but storing a timestamp for every request can become resource-intensive at high volumes. A social media API might use this approach to monitor and limit posts or comments from overly active users within a rolling time frame.
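To make the token bucket and sliding window descriptions concrete, here’s a minimal single-process Python sketch. The class and method names (`TokenBucket`, `SlidingWindowLog`, `allow`) are illustrative assumptions, not any particular library’s API, and the current time can be passed in explicitly so the behavior is easy to test. A production limiter would usually keep this state in shared storage so that every API server sees the same counts.

```python
import time
from collections import deque
from typing import Optional


class TokenBucket:
    """Token bucket: holds at most `capacity` tokens, refilled at `refill_rate` per second."""

    def __init__(self, capacity: float, refill_rate: float, now: Optional[float] = None):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.tokens = float(capacity)  # start full, so an initial burst can get through
        self.last_refill = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Credit the tokens that accrued since the last check, capped at capacity.
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1  # each request spends one token
            return True
        return False  # bucket empty: the caller must wait for a refill


class SlidingWindowLog:
    """Sliding window log: keeps one timestamp per accepted request."""

    def __init__(self, limit: int, window_seconds: float):
        self.limit = limit
        self.window_seconds = window_seconds
        self.timestamps = deque()  # oldest accepted request on the left

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Forget requests that have slid out of the look-back window.
        while self.timestamps and self.timestamps[0] <= now - self.window_seconds:
            self.timestamps.popleft()
        if len(self.timestamps) < self.limit:
            self.timestamps.append(now)
            return True
        return False
```

With `TokenBucket(capacity=100, refill_rate=10)`, a burst of 100 requests goes through at once, and after that roughly 10 per second get in. The sliding window log’s `deque` holds one entry per request in the window, which is exactly where its extra memory cost comes from.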

User-based

You can also deploy per-user rate limiting, which is exactly what it sounds like. Each user gets a specific quota (e.g., 1,000 API calls per day). To make this work, you need a reliable way to identify them, usually with an API key, username, or token.

You’ve probably noticed that most APIs have different tiers (free, premium, enterprise, super-deluxe galactic enterprise), and a user-based rate limit will vary based on their subscription level. For instance, a SaaS tool might give free users access to 500 API calls a day, while enterprise customers get 10,000. This all hinges on good authentication—the system has to know who is making the call to know which limit to apply.
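Under the hood, a per-user quota is a counter keyed by who’s calling. This sketch assumes the caller has already been authenticated and mapped to a tier. The tier names and the 500 and 10,000 limits come from the example above, while the `DailyQuota` class and the reset at UTC midnight are illustrative assumptions.

```python
import datetime
from typing import Dict, Optional, Tuple

# Hypothetical tier table using the example limits from the text.
DAILY_LIMITS = {"free": 500, "enterprise": 10_000}


class DailyQuota:
    """Per-user daily quota; the counter resets when the UTC date changes."""

    def __init__(self, limits: Dict[str, int]):
        self.limits = limits
        # (api_key, date) -> requests used that day. A real service would expire old days.
        self.used: Dict[Tuple[str, datetime.date], int] = {}

    def allow(self, api_key: str, tier: str, today: Optional[datetime.date] = None) -> bool:
        if today is None:
            today = datetime.datetime.now(datetime.timezone.utc).date()
        limit = self.limits[tier]  # the tier comes from authenticating the API key
        key = (api_key, today)
        if self.used.get(key, 0) >= limit:
            return False  # quota spent for today
        self.used[key] = self.used.get(key, 0) + 1
        return True
```

Because the day is part of the key, each user starts fresh on a new date without any explicit reset job.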

IP-based

Instead of tracking users, per-IP rate limiting caps the number of requests that can be sent from a single IP address. It’s a blunt-force way to protect backend resources, maintain stability for legitimate users, and stop bad actors—like hackers, botnets, or rogue superintelligences—from pummeling your service.

For example, a weather data provider might limit any single IP to 60 requests a minute. That’s more than enough for a human, but it stops a data-scraping script in its tracks.
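Per-IP limiting can sit on top of any of the algorithms above. This sketch uses a fixed window counter (the fixed windows mentioned at the start of this article) with the weather provider’s 60 requests per minute. The `FixedWindowPerIP` class and its in-memory dictionary are assumptions for illustration.

```python
import time
from typing import Dict, Optional, Tuple


class FixedWindowPerIP:
    """Allow at most `limit` requests per IP address in each fixed time window."""

    def __init__(self, limit: int = 60, window_seconds: int = 60):
        self.limit = limit
        self.window_seconds = window_seconds
        # ip -> (index of the window we last saw, requests counted in it)
        self.counters: Dict[str, Tuple[int, int]] = {}

    def allow(self, ip: str, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        window_index = int(now // self.window_seconds)  # which minute we're in
        seen_window, count = self.counters.get(ip, (window_index, 0))
        if seen_window != window_index:
            count = 0  # a new window has started, so the counter resets
        if count >= self.limit:
            return False
        self.counters[ip] = (window_index, count + 1)
        return True
```

A human checking the forecast every few seconds never notices the limit, while a script firing 100 requests in one second gets only 60 through. Two trade-offs are worth knowing: a client can squeeze up to twice the limit across a window boundary (60 at 0:59, 60 more at 1:00), and everyone behind one shared IP, such as an office network, shares a single allowance.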

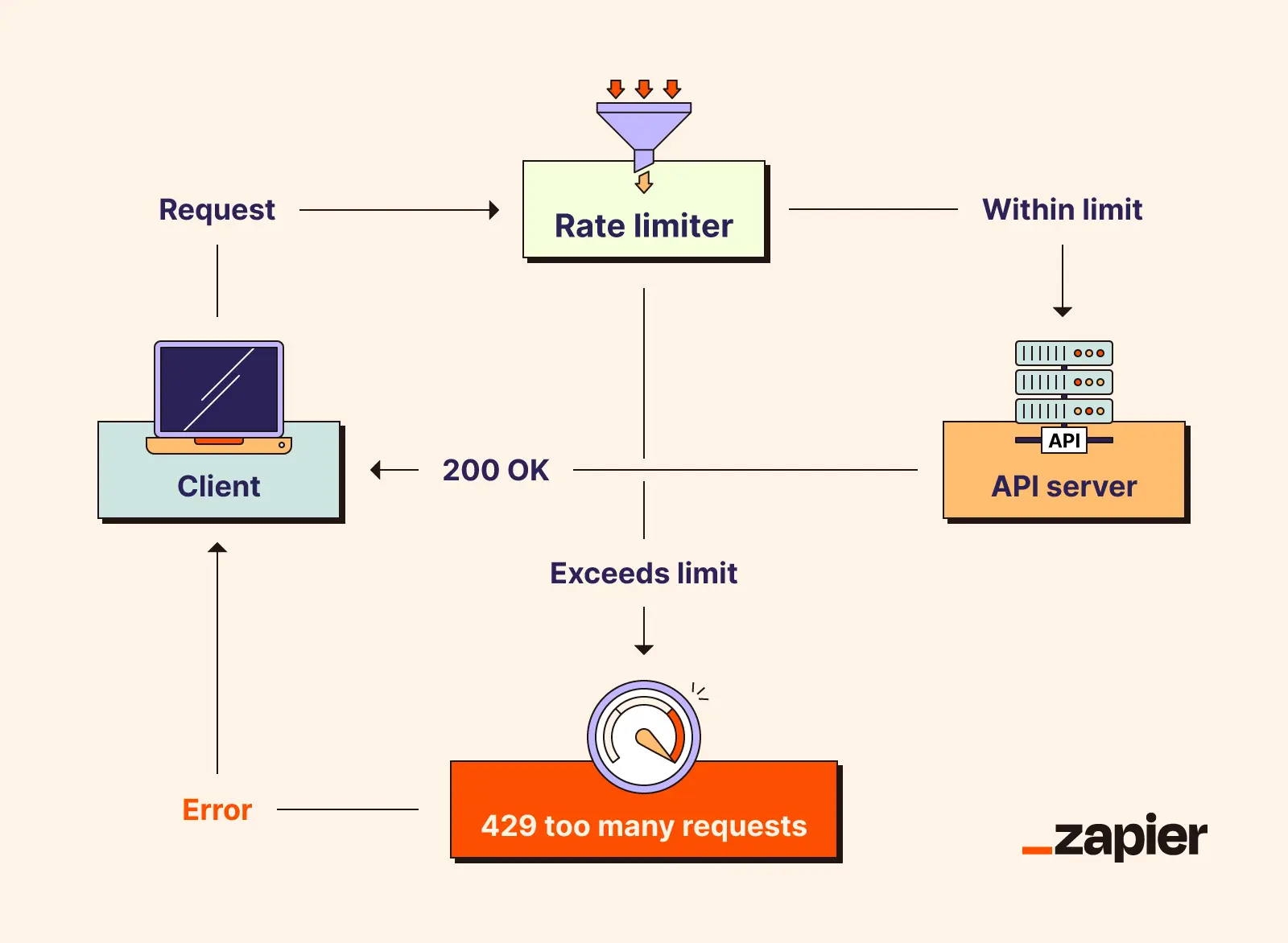

API throttling

API throttling refers to the action of enforcing a call limit. When a user exceeds their quota, the API throttles them by either slowing down their requests or rejecting them completely. (That’s when you’ll see a 429 “too many requests” error.) It’s an essential defense for keeping the server healthy for everyone, preventing one noisy user from ruining the party, whether they mean to or not.
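On the server side, throttling mostly comes down to what you send back. This sketch assumes a fixed-window limit and a hypothetical `rate_limit_response` helper that your web framework would call. When the limit is reached it returns a 429 plus a `Retry-After` header (a standard HTTP header that RFC 6585 suggests pairing with 429) telling the client how many seconds remain until the window resets.

```python
import math
from http import HTTPStatus


def rate_limit_response(requests_this_window: int, limit: int, now: float,
                        window_seconds: float = 60.0):
    """Decide how to answer a request under a fixed-window limit.

    Returns an (HTTPStatus, headers) pair. `requests_this_window` is how many
    requests the client has already made in the current window.
    """
    if requests_this_window < limit:
        return HTTPStatus.OK, {}
    # Seconds until the current window ends and the counter resets.
    seconds_left = window_seconds - (now % window_seconds)
    # Retry-After is expressed in whole seconds, so round up (never below 1).
    retry_after = max(1, math.ceil(seconds_left))
    return HTTPStatus.TOO_MANY_REQUESTS, {"Retry-After": str(retry_after)}
```

Telling the client exactly when to come back is kinder than a bare error, and it discourages clients from retrying in a tight loop.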

Request queuing

Rather than simply rejecting excess API requests outright, request queuing puts them in a first-come, first-served queue to be processed as soon as there’s room. As with some of the other techniques, this strategy is perfect for handling sudden traffic spikes, as it lets the API process requests sequentially and at a manageable pace.
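Here’s a minimal sketch of a bounded first-come, first-served queue, assuming a scheduler calls a hypothetical `tick()` method once per time unit (say, once per second) to release a fixed number of requests. It also shows how the leaky bucket from the algorithm list behaves: the queue is the bucket, and `per_tick` is the size of the hole. The class and method names are illustrative only.

```python
from collections import deque


class RequestQueue:
    """Bounded FIFO queue drained at a fixed number of requests per tick."""

    def __init__(self, max_size: int, per_tick: int):
        self.max_size = max_size
        self.per_tick = per_tick
        self.pending = deque()

    def submit(self, request) -> bool:
        # Queue the request if there's room; a full queue still has to turn people away.
        if len(self.pending) >= self.max_size:
            return False
        self.pending.append(request)
        return True

    def tick(self) -> list:
        # Release up to `per_tick` requests, oldest first.
        released = []
        while self.pending and len(released) < self.per_tick:
            released.append(self.pending.popleft())
        return released
```

The queue needs a maximum size: an unbounded waiting room just moves the memory problem from your API to your queue.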

You’ve probably seen this yourself if you’ve ever tried to buy concert tickets the moment they go on sale. That virtual waiting room is a queue, and it’s far better than getting an error message. Unfortunately, you’ll have to pay Ticketmaster service fees either way.

Regardless of which approach you choose, remember that API rate limiting isn’t meant to be super complicated. Most modern API management platforms make setting and enforcing these limits relatively straightforward. Even if you’re running a reverse proxy such as NGINX, you can set rate limits with its built-in limit_req module.

API rate limiting best practices

To effectively address API rate limits while achieving the protections outlined earlier, consider these best practices:

- Analyze usage patterns: Review run logs, error reports, and dashboard analytics to identify cycles of high usage and the patterns that lead to hitting rate limits.

- Pinpoint high-traffic areas: Identify which apps or users generate the most traffic and adjust schedules or logic to spread the load more evenly. (If you’re familiar with network engineering, this will sound a lot like load balancing.)

- Leverage existing tooling: Use your chosen platform’s built-in monitoring and real-time alerts to manage spikes and keep those all-important workflow orchestrations humming along nicely.

- Set flood protection limits: If you’re using Zapier, you can edit Zap flood protection settings to cap how many times a Zap can trigger or run in a set window. Use the flood protection menu to set a max number of Zap runs per hour, or choose an interval that makes more sense for your application.

- Use Zapier’s replay logic: When Zap runs are throttled or fail due to rate limits, Zapier lets you “replay” them easily once the limits reset. Turn on automatic replay for mission-critical Zaps so workflows recover from temporary hiccups without manual intervention.

- Consider webhooks over polling: In many cases, it’s possible to switch from polling triggers (checking on set intervals) to instant webhooks. Webhooks keep an application responsive while minimizing unnecessary API requests, which is especially valuable under rate constraints.

- Document limits prominently: Note all app-specific rate limits in onboarding materials, API documentation, and technical references, and make sure every team member knows the limits for the apps they work with. Spreading the word this way should (hopefully) prevent unexpected errors and complaints.

- Give advance warning: Set up real-time usage monitoring and notification steps that alert you proactively when usage approaches a threshold (e.g., 75% of quota). Notify users through Slack, email, or dashboards as soon as limits are at risk; this gives them time to adjust scheduling or workflows before any disruption occurs.
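The advance-warning idea can be sketched as a small monitor that fires once per threshold as usage climbs. The `QuotaMonitor` class, the 75/90/100 percent thresholds, and the `notify` hook are assumptions for illustration. In practice `notify` would post to Slack, send an email, or update a dashboard, and `alerted` would be cleared when the quota period resets.

```python
from typing import Callable, Iterable


class QuotaMonitor:
    """Fire one notification per threshold as usage climbs toward a quota."""

    def __init__(self, quota: int, thresholds_pct: Iterable[int] = (75, 90, 100),
                 notify: Callable[[str], None] = print):
        self.quota = quota
        self.thresholds_pct = sorted(thresholds_pct)
        self.notify = notify  # hypothetical hook: swap in your own Slack/email sender
        self.alerted = set()  # thresholds already reported, to avoid repeat alerts

    def record(self, used: int) -> None:
        for pct in self.thresholds_pct:
            # Integer arithmetic avoids floating-point surprises at exact thresholds.
            if used * 100 >= pct * self.quota and pct not in self.alerted:
                self.alerted.add(pct)
                self.notify(f"API usage at {used}/{self.quota}: past the {pct}% warning threshold")
```

Calling `record` with the current usage after each batch of requests sends the 75% warning once, rather than on every request after the threshold is crossed.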

Connect and orchestrate your apps with Zapier

Strong orchestration starts with smart API rate limiting. Spikes happen, but by setting clear request rules, they don’t become outages. Implementing the above strategies can ensure your systems remain stable, secure, and pleasantly boring.

Ready to streamline your workflows further? Check out Zapier’s IT automation solutions to see how easy it is to connect and orchestrate your apps seamlessly.

FAQ: Rate limiting in APIs

Use these frequently asked questions to design sane defaults, tier access thoughtfully, and avoid the kind of traffic spikes that turn a healthy API into a help desk ticket.

What is a good rate limit for an API?

The “right” rate limit for an API depends on several factors, including the purpose of the API, its expected usage patterns, and the resources available to handle requests. Public APIs, for example, often set lower limits—like 1,000-5,000 requests per day or 60 requests per minute—to ensure fair usage and prevent abuse. Enterprise or premium APIs, on the other hand, generally offer much higher limits—like tens of thousands of requests per minute—to accommodate heavier workloads from paying customers.

A good rule is to analyze typical usage patterns and set thresholds that balance accessibility with system stability. It’s also wise to tier limits based on user types.

What is “API rate limit exceeded”?

When you see an error message like “API rate limit exceeded,” it means the number of requests made to the API has surpassed the allowed limit within a specific period, which could result in your requests being temporarily blocked until the next quota reset.
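On the client side, the usual remedy is to wait and retry rather than hammering the API again right away. This sketch is deliberately not tied to any HTTP library: `send` is a hypothetical function that returns a `(status_code, headers)` pair. It retries on 429, honors a `Retry-After` value given in seconds, and otherwise falls back to exponential backoff with random jitter. It assumes plain dictionary headers, so real code should use its HTTP library’s case-insensitive header lookup and may also need to handle `Retry-After` sent as a date.

```python
import random
import time


def call_with_backoff(send, max_attempts=5, base_delay=1.0, max_delay=60.0, sleep=time.sleep):
    """Call `send()` and retry while the API answers 429 Too Many Requests."""
    for attempt in range(max_attempts):
        status, headers = send()
        if status != 429:
            return status, headers
        if attempt == max_attempts - 1:
            break  # out of attempts: hand the 429 back to the caller
        retry_after = headers.get("Retry-After")
        if retry_after is not None and retry_after.isdigit():
            delay = float(retry_after)  # the server told us exactly how long to wait
        else:
            # Exponential backoff (1s, 2s, 4s, ... capped at max_delay), with
            # jitter so many clients don't all retry at the same instant.
            delay = min(max_delay, base_delay * 2 ** attempt) * random.uniform(0.5, 1.0)
        sleep(delay)
    return status, headers
```

The `sleep` parameter exists so the function can be tested without actually waiting; in normal use you’d leave it as `time.sleep`.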

What is a rate limiter?

A rate limiter is a mechanism that controls how many requests can be made to an API within a given time frame. Its main job is to protect the API server from being overwhelmed by too much traffic, whether intentional (like during a cyberattack) or unintentional (like a poorly configured app making excessive calls).

Rate limiters enforce rules set by the API provider, such as allowing only 60 requests per minute from a single IP address or capping daily usage at 1,000 requests per user. This helps maintain fairness, improve security, and ensure consistent performance for all users.
