Remember that episode of “Seinfeld” where Jerry and the gang got hooked on non-fat yogurt? Everyone crammed their faces with the stuff, feeling virtuous because the sign promised it was healthy, until a lab test proved it was regular fat-laden yogurt the entire time.

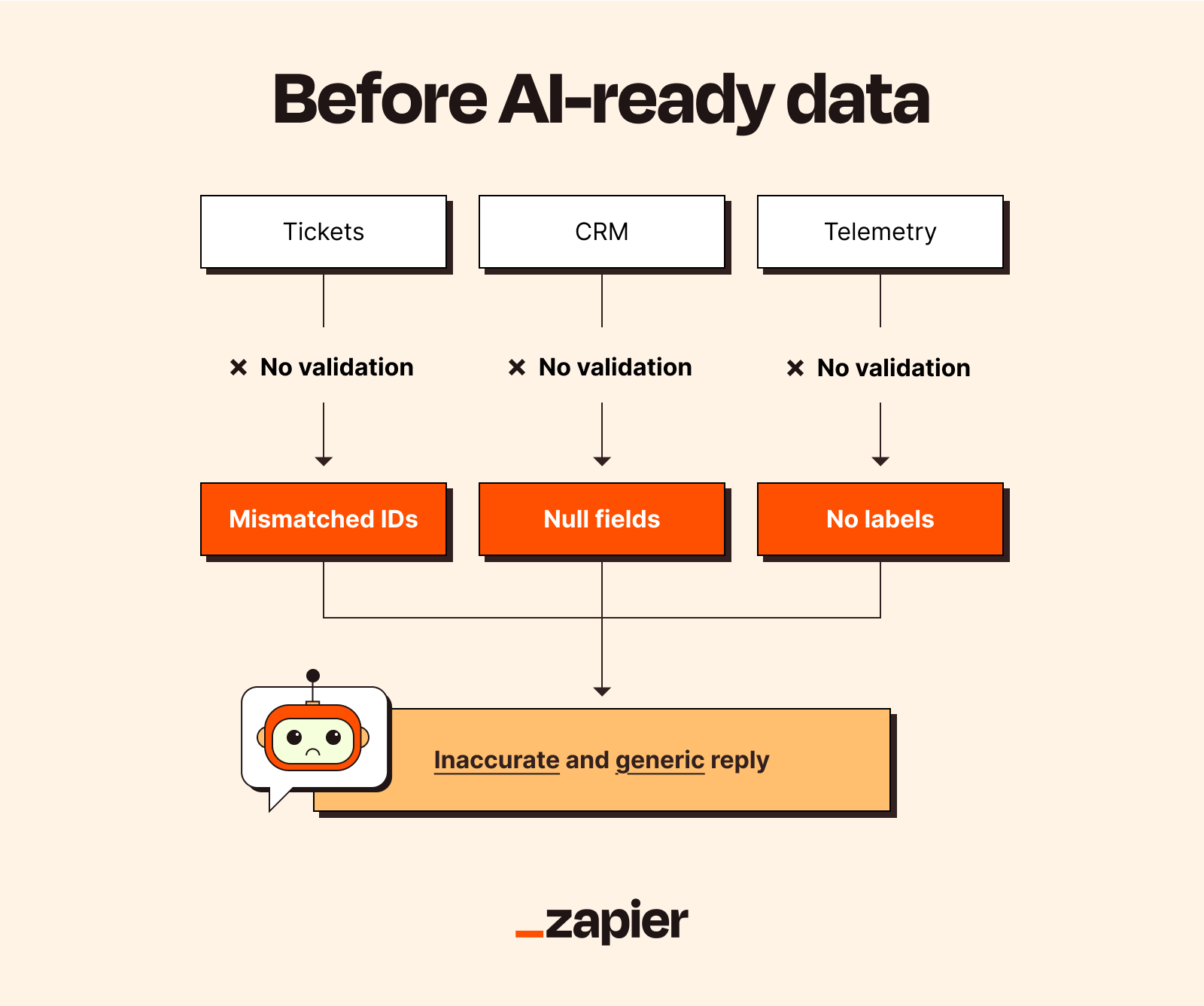

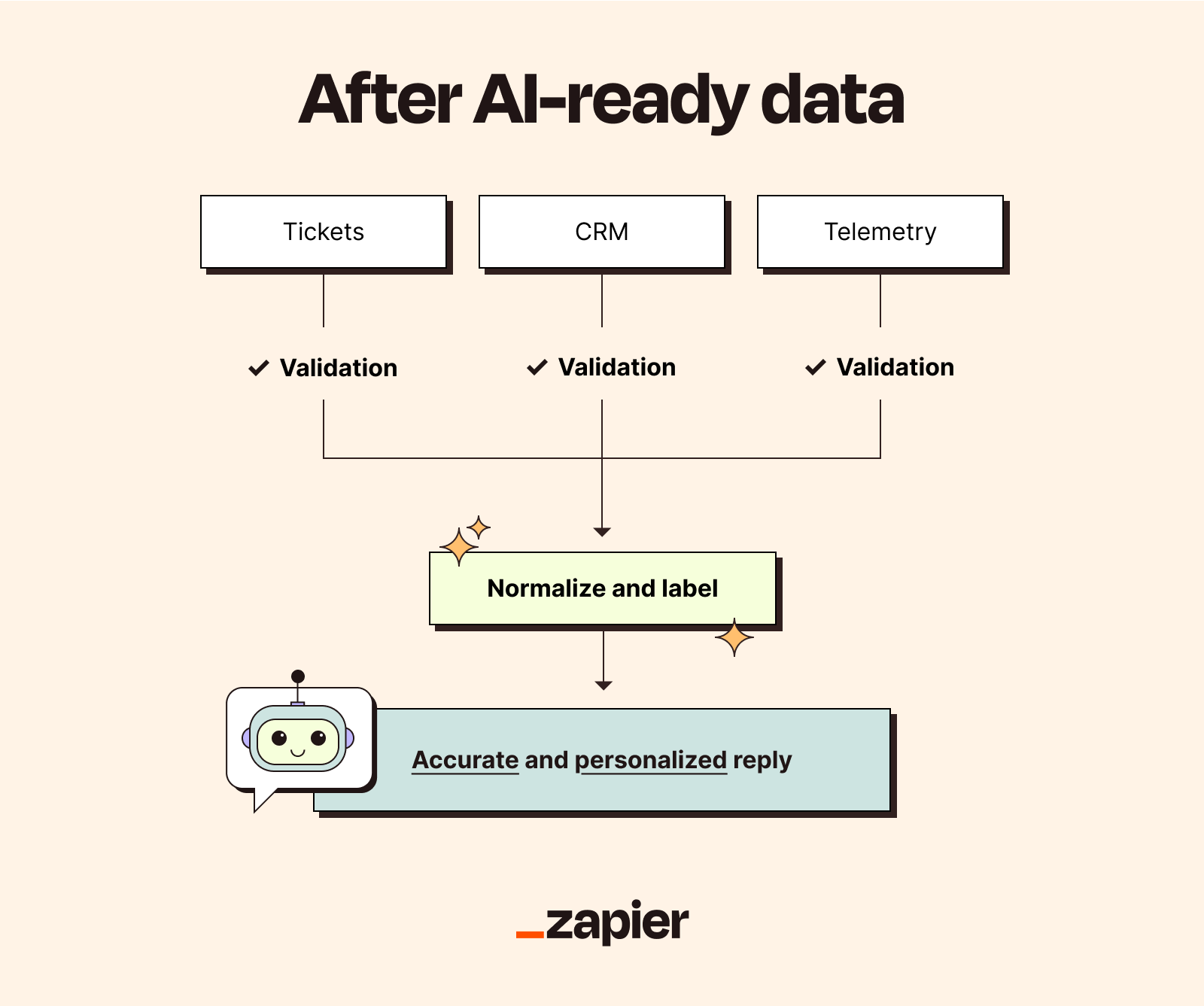

AI-ready data is about preventing that exact betrayal. A dataset can look tidy on the surface, but an AI readiness audit may reveal hidden fat (duplicates, label leakage, unit mismatches, and missing values). Data that’s full of inconsistencies, gaps, and formatting nightmares is the equivalent of full-fat yogurt in a non-fat container.

In this article, I’ll walk through how to audit the data you have, clean what you keep, and govern what you ship, so your AI models are trained on the good stuff.

What is AI-ready data?

AI-ready data is clean, structured, and well-governed information that has been specifically prepared for immediate consumption by AI and machine learning models. Think of it as the difference between cooking with prepped ingredients and panic-dumping the pantry into a pot while hoping for soup.

Making data ready for AI isn’t just about having a lot of data. That’s like saying you’re ready to cook because you bought every item in the grocery store. It takes a well-managed data pipeline that includes collecting, storing, cleaning, and managing data.

AI-ready data prioritizes accuracy, consistency, and contextual relevance. It has to meet technical and governance standards for reliable AI performance. This means no missing information, no random formatting inconsistencies, and no duplicate entries. (AI doesn’t appreciate redundancy the way your drunk uncle appreciates repeating the same story at family gatherings.)

When you’re building your data workflows, use a tool like Zapier to automate the collection and initial processing of info from various sources. For example, you might use Zapier to automatically pull customer contact information from multiple platforms and standardize the format before it hits your data warehouse.

What makes data ready for AI?

The qualities that make data truly ready for AI go far beyond basic cleanliness. Here are some important differences between data that enables successful AI and data that sabotages it:

- Quality: Your data must be free of typos, errors, and duplicate entries to make sure AI models are fed accurate inputs. Quality data is like a good friend. It tells you the truth, shows up when expected, and doesn’t repeat itself constantly.

- Structure: While AI can read messy, unstructured data, it works much faster and more reliably with data that’s neatly organized. (Think of a well-labeled spreadsheet, not a ransom note.) A clean, consistent format makes it far easier for AI algorithms to detect patterns and produce high-quality results.

- Context: A number by itself means nothing. The AI needs metadata (basically data about the data) to understand what it’s looking at. For example, is the number “30” a price, a customer’s age, or the percentage of users who actually finish onboarding before rage-quitting? This context is what links the data to your business objectives.

- Governance and security: You don’t want your AI system running wild with sensitive customer info, or getting subpoenaed because you forgot to comply with, oh, I don’t know, every major privacy regulation ever. It’s important to implement proper access controls, track data lineage, and operate within ethical and legal boundaries.

- Accessibility: You want your data to be organized and stored in a way that makes it easy for data scientists and AI systems to pull. It should be easy to get to, clearly labeled, and ideally not stowed away on someone’s personal desktop next to a folder called “divorce stuff.”

- Relevance: Your data might be high-quality, well-structured, and perfectly accessible, but if it doesn’t match your AI use case, it won’t deliver value. Also, historical data that’s too old becomes irrelevant. Using shopping data from 1995 to predict current trends won’t work. People bought different things then, like floppy disks and common sense.
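One lightweight way to supply the context described above is a data dictionary that travels with the dataset. The sketch below is illustrative only: the `ColumnMeta` class, the column names, and the units are hypothetical, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnMeta:
    """Describes what one column means (hypothetical schema for illustration)."""
    name: str
    description: str
    unit: str


# Hypothetical data dictionary: three columns that could all hold the value 30.
DATA_DICTIONARY = {
    "price": ColumnMeta("price", "List price of the product", "USD"),
    "age": ColumnMeta("age", "Customer age at signup", "years"),
    "onboarding_completion": ColumnMeta(
        "onboarding_completion", "Share of users who finish onboarding", "percent"
    ),
}


def describe(column, value):
    """Render a raw value together with the context that makes it meaningful."""
    meta = DATA_DICTIONARY[column]
    return f"{meta.name} = {value} {meta.unit} ({meta.description})"


def undocumented_columns(row):
    """Return columns present in a row that have no data dictionary entry."""
    return sorted(c for c in row if c not in DATA_DICTIONARY)


print(describe("age", 30))
print(undocumented_columns({"price": 30, "mystery_score": 30}))
```

Running a check like `undocumented_columns` before training is one way to catch fields nobody can explain before a model starts learning from them.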

Why AI-ready data matters

Reading about data preparation makes C-SPAN at 3 a.m. look like a Michael Bay explosion sequence. But when your data is clean and ready for AI, you get a bunch of competitive advantages:

- Enhanced decision-making: Well-governed datasets allow leaders to confidently act on AI-generated insights, improving operational efficiency and strategic planning.

- Accelerated AI adoption: Companies with mature data preparation processes can deploy AI solutions faster and scale them more easily. Zapier workflows can speed up this process quite a bit. Instead of manually collecting and cleaning data for each new AI project, you can build automated pipelines that continuously prepare fresh, clean data.

- Regulatory compliance: Regulators want to understand how your AI models make decisions. That requires tracing back through your data pipeline to show exactly what information influenced each prediction. With clean data, this is possible. Without it, you’re just guessing. And writing “because the AI said so” on regulatory forms doesn’t work. I’ve tried.

- Risk mitigation: High-quality data reduces the likelihood of biased outcomes, model failures, and costly AI project abandonment. An infamous example is the Amazon AI recruitment tool that learned to penalize résumés from women applying for tech roles, because its training data reflected historical hiring bias. The AI didn’t wake up one morning and decide to be sexist. It just learned from data that reflected existing human prejudices and amplified them into something even worse.

How to prepare your data for AI

To make data AI-ready, you’ll need a structured process. This ensures the data you use to train and deploy models is clean, organized, relevant, and reliable.

1. Define clear objectives and assess readiness

Before touching any data, establish specific goals for your AI project. (No, “AI” is not a goal.) Are you trying to predict customer behavior? Automate customer service? Develop a recommendation system that’s better than Netflix’s algorithm? (Seriously, Netflix, I watched one gritty European crime drama and now you think I want to see every murder-adjacent documentary ever made. You’re not wrong, but still.)

Once you have a goal, conduct an AI readiness assessment to evaluate the data you’ve already got. This should answer a few simple questions:

- What problem is this actually solving for the business?

- Which data sources contain relevant information?

- How clean does this data need to be for the AI to be useful?

- Are we going to get in trouble (legally or otherwise) for using this data?

Figure out what “success” actually looks like early, and get stakeholders aligned so they don’t move the goalposts mid-sprint.
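A first pass at the readiness assessment can be as simple as counting gaps and duplicates in the data you already have. The following is a minimal sketch, assuming your records can be loaded as a list of dictionaries; the `audit` function and the sample customers are hypothetical.

```python
from collections import Counter


def audit(rows, key_fields):
    """Summarize missing values and duplicate keys in a list of dict records."""
    missing = Counter()
    for row in rows:
        for field, value in row.items():
            # Treat None and empty strings as missing.
            if value is None or value == "":
                missing[field] += 1

    # Records sharing the same key fields are counted as duplicates.
    keys = Counter(tuple(row.get(f) for f in key_fields) for row in rows)
    duplicate_rows = sum(count - 1 for count in keys.values() if count > 1)

    return {
        "row_count": len(rows),
        "missing_by_field": dict(missing),
        "duplicate_rows": duplicate_rows,
    }


# Hypothetical sample records.
customers = [
    {"email": "ana@example.com", "age": 34, "plan": "pro"},
    {"email": "ana@example.com", "age": 34, "plan": "pro"},
    {"email": "bo@example.com", "age": None, "plan": ""},
]

report = audit(customers, key_fields=["email"])
print(report)
```

A report like this won't tell you whether the data is good enough, but it gives stakeholders concrete numbers to argue about instead of vibes.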

2. Blend and consolidate data sources

Start by pulling in all the data the project calls for. This means grabbing everything that’s relevant, whether it’s the clean, organized stuff from your databases, data lakes, and data integration tools, or the messy, unstructured data like documents, emails, and audio files.

As you’re gathering it, you have to be picky. Make sure your data is:

- Relevant: It should be directly related to the problem you’re solving. Irrelevant or low-quality data leads to poor model performance.

- Diverse: It should include edge cases, which makes your model more robust and less prone to bias.

- Representative: It should cover the full spectrum of conditions your AI system will face in production.

This process can be a huge pain, but tools can help. An AI orchestration platform like Zapier is great for pulling in and reformatting data from all those different sources.

Alternatively, use a dedicated ETL (extract, transform, load) tool to aggregate data. Modern data pipeline platforms like Apache Airflow, Databricks, and Fivetran have connectors already built in, which makes hooking up all your data sources much simpler.
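Whatever tool does the pulling, consolidation usually comes down to mapping each source's field names onto one shared schema. Here is a rough sketch of that idea in plain Python; the source names, field names, and `FIELD_MAPS` table are made up for illustration and don't reflect how any of the tools above work internally.

```python
# Hypothetical field mappings: source field name -> common schema field name.
FIELD_MAPS = {
    "crm": {"Email Address": "email", "Full Name": "name"},
    "webform": {"email": "email", "contact_name": "name"},
}


def to_common_schema(record, source):
    """Rename a record's fields to the shared schema and tag its origin."""
    mapping = FIELD_MAPS[source]
    unified = {target: record.get(field) for field, target in mapping.items()}
    # Normalize the join key so the same person matches across sources.
    if unified.get("email"):
        unified["email"] = unified["email"].strip().lower()
    unified["source"] = source
    return unified


def consolidate(batches):
    """Flatten {source: [records]} into one list in the common schema."""
    return [
        to_common_schema(record, source)
        for source, records in batches.items()
        for record in records
    ]


batches = {
    "crm": [{"Email Address": " Ana@Example.com ", "Full Name": "Ana Diaz"}],
    "webform": [{"email": "bo@example.com", "contact_name": "Bo Chen"}],
}
unified_records = consolidate(batches)
print(unified_records)
```

Keeping the `source` tag on every record costs almost nothing and makes later debugging (and lineage tracking) much easier.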

3. Clean and standardize data

Messy data will give you messy (and often costly) results. This step is where you fix all that. It’s not one single task, but a few key housekeeping chores:

- Remove duplicates: Find and remove redundant records that can skew analysis and slow processing.

- Handle missing values: Decide what to do with missing information (fill it in, delete it, etc.).

- Manage outliers: Deal with wild outlier numbers (like a 200-year-old customer) that will skew your results.

- Address inconsistencies: Standardize data formats, fix naming conventions, and make sure all data uses the same units and scales. NASA learned this the hard way when the Mars Climate Orbiter was lost in 1999 because one team’s software reported values in pound-force seconds while another’s expected newton-seconds. Don’t be like NASA. Standardize your units.
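To make the four chores above concrete, here is a small sketch that applies each one to a toy dataset. It assumes records are dictionaries with hypothetical `customer_id`, `age`, `weight`, and `weight_unit` fields, and it fills missing ages with the median, which is only one of several reasonable choices.

```python
from statistics import median

LB_TO_KG = 0.45359237  # definition of the international pound in kilograms


def clean(rows, max_age=120):
    """Deduplicate, standardize units, null out outliers, and fill gaps."""
    seen = set()
    cleaned = []
    for row in rows:
        # 1. Remove duplicates: keep the first record per customer_id.
        if row["customer_id"] in seen:
            continue
        seen.add(row["customer_id"])
        row = dict(row)  # copy so the caller's data is not mutated

        # 2. Address inconsistencies: store every weight in kilograms.
        if row["weight_unit"] == "lb":
            row["weight"] = round(row["weight"] * LB_TO_KG, 2)
            row["weight_unit"] = "kg"

        # 3. Manage outliers: an impossible age is treated as missing.
        if row["age"] is not None and not 0 <= row["age"] <= max_age:
            row["age"] = None

        cleaned.append(row)

    # 4. Handle missing values: fill missing ages with the median known age.
    known_ages = [r["age"] for r in cleaned if r["age"] is not None]
    fill_value = median(known_ages) if known_ages else None
    for r in cleaned:
        if r["age"] is None:
            r["age"] = fill_value
    return cleaned


raw = [
    {"customer_id": 1, "age": 34, "weight": 150.0, "weight_unit": "lb"},
    {"customer_id": 1, "age": 34, "weight": 150.0, "weight_unit": "lb"},  # duplicate
    {"customer_id": 2, "age": 200, "weight": 70.0, "weight_unit": "kg"},  # outlier
    {"customer_id": 3, "age": None, "weight": 82.5, "weight_unit": "kg"},  # missing
    {"customer_id": 4, "age": 40, "weight": 60.0, "weight_unit": "kg"},
]
cleaned = clean(raw)
for row in cleaned:
    print(row)
```

The order matters here: outliers are nulled out before the median is computed, so the 200-year-old customer doesn't drag the fill value upward.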

AI can actually help you do all that before it ingests the final data. Implement automated data quality checks using AI-powered validation tools. These systems can continuously monitor data pipelines for quality issues, detect anomalies by learning patterns in your data, flag invalid entries automatically, and enrich data with context.

4. Reduce and optimize data complexity

Gathering data isn’t like collecting Pokémon cards, where the goal is to catch them all. You often get better, faster results by trimming the fat (unlike the “Seinfeld” yogurt) and forcing the AI to focus on what’s actually important. This means:

- Selecting features: Figure out which pieces of data actually help the AI make a good prediction. If you’re predicting customer churn, data about your office plant isn’t helpful. Unless your customers are leaving because of the plant. Is it the plant? Maybe check the plant.

- Combining similar data: Sometimes you have way too many columns that are all related. Apply techniques like principal component analysis (PCA) to compress them into a smaller set of new columns that capture most of the variation in the originals. PCA is like creating a greatest hits album for your data: you’re keeping the most important parts while reducing the overall volume to something more manageable.

- Balancing data: Datasets shouldn’t be heavily skewed toward particular outcomes. Skew biases the model toward the most common outcome and hides how badly it handles the rare one. If you’re training a fraud detection model and 99% of your data represents legitimate transactions, your model might simply learn to classify everything as “not fraud.” It’ll be right 99% of the time. But that 1% is expensive.
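The fraud example is worth running through with actual numbers. This sketch scores a do-nothing "everything is legit" model, then rebalances the data with random oversampling. Oversampling is one common balancing technique among several, and the function names and labels here are hypothetical.

```python
import random


def accuracy_and_recall(labels, predictions, positive="fraud"):
    """Return (accuracy, recall on the positive class)."""
    correct = sum(1 for y, p in zip(labels, predictions) if y == p)
    preds_for_positives = [p for y, p in zip(labels, predictions) if y == positive]
    caught = sum(1 for p in preds_for_positives if p == positive)
    recall = caught / len(preds_for_positives) if preds_for_positives else 0.0
    return correct / len(labels), recall


def oversample_minority(rows, label_key, seed=0):
    """Randomly duplicate smaller classes until each matches the largest."""
    rng = random.Random(seed)
    by_class = {}
    for row in rows:
        by_class.setdefault(row[label_key], []).append(row)
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        balanced.extend(rng.choices(group, k=target - len(group)))
    return balanced


# 99% legitimate, 1% fraud, as in the example above.
labels = ["legit"] * 990 + ["fraud"] * 10
always_legit = ["legit"] * len(labels)
accuracy, recall = accuracy_and_recall(labels, always_legit)
print(accuracy, recall)  # 0.99 accuracy, but 0.0 recall: no fraud is caught

transactions = [{"label": y} for y in labels]
balanced = oversample_minority(transactions, "label")
print(len(balanced))  # 1980 rows, half of them fraud
```

Two cautions if you try this for real: oversample only the training split (after you've set test data aside), and judge the model on recall or a similar metric rather than accuracy alone.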

5. Govern, secure, and validate

Proper data governance ensures AI systems operate ethically, securely, and in compliance with the regulations that apply to you. It’s like having rules at a pool. No running. No diving in the shallow end. No feeding your data to unauthorized users.

This means you need to check that you’re following all the necessary privacy laws like GDPR or HIPAA. You’ll have to protect sensitive information using common tools like encryption, anonymization (stripping out personal details), and strict access controls so only the right people can see it.
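As a rough illustration of stripping out personal details, the sketch below drops direct identifiers and swaps the email address for a keyed hash. The field names and the `pseudonymize` helper are hypothetical, and the key is a placeholder: in practice it would come from a secrets manager. Strictly speaking this is pseudonymization rather than full anonymization, and under GDPR pseudonymized data still counts as personal data, so treat it as one layer of protection, not a compliance guarantee.

```python
import hashlib
import hmac

# Hypothetical list of direct identifiers to strip; adapt to your own schema.
DIRECT_IDENTIFIERS = {"name", "phone", "street_address"}

# Placeholder key for the demo only. Never hard-code a real key.
DEMO_KEY = b"demo-key-do-not-use"


def pseudonymize(record, secret_key, id_field="email"):
    """Drop direct identifiers and replace the ID field with a keyed hash."""
    safe = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    raw_id = str(safe.pop(id_field)).strip().lower().encode("utf-8")
    # HMAC-SHA256 yields a stable token that can't be recomputed without the key.
    safe["customer_token"] = hmac.new(secret_key, raw_id, hashlib.sha256).hexdigest()
    return safe


record = {
    "email": "Ana@Example.com",
    "name": "Ana Diaz",
    "phone": "555-0100",
    "plan": "pro",
}
token_record = pseudonymize(record, DEMO_KEY)
print(token_record)
```

Because the same email always maps to the same token, you can still join records across tables without exposing who the customer is.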

It’s also critical to track where your data comes from, how it’s been changed, and which AI models are using it. This paper trail makes it much faster to find and fix problems and helps you prove to regulators that you’re doing things right.
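A paper trail doesn't have to start with a dedicated lineage product. As a minimal sketch, assuming each pipeline step can call a logging helper, you could record a content fingerprint before and after every transformation. The `record_step` function, the step names, and the model name below are all hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(rows):
    """Hash a dataset's content so any change produces a different ID.

    Assumes the rows are JSON-serializable.
    """
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


def record_step(log, step, source, rows_in, rows_out, used_by=None):
    """Append one lineage entry describing a single transformation."""
    log.append({
        "step": step,
        "source": source,
        "input_fingerprint": fingerprint(rows_in),
        "output_fingerprint": fingerprint(rows_out),
        "rows_in": len(rows_in),
        "rows_out": len(rows_out),
        "used_by": used_by or [],
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    })
    return log


raw = [{"id": 1}, {"id": 1}, {"id": 2}]
deduped = [{"id": 1}, {"id": 2}]

lineage = record_step(
    [], "deduplicate", "crm_export", raw, deduped, used_by=["churn_model_v1"]
)
print(json.dumps(lineage, indent=2))
```

When a model starts misbehaving, matching its training data's fingerprint against this log tells you which step last touched it.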

Orchestrate your path to AI-ready data

Even the best AI tools are useless without a steady stream of high-quality data. But getting your data “AI-ready” can feel like a massive, never-ending chore where all the critical steps are exactly the kind of manual, error-prone tasks that slow projects down.

The good news is you don’t need to hire a team of engineers to build a complex, custom-coded pipeline. Use Zapier for data automation to orchestrate your own AI-ready workflows that can automatically fix formatting errors as they come in, validate new leads, and sync clean data across all apps. Plus, Zapier eliminates the need for manual software integrations by connecting with over 8,000 apps instantly. Learn more about how to use Zapier for data management.
