Consumer AI is fun for the same reason novelty mugs are fun: the stakes are low, the consequences are negligible, and you can abandon the output the moment it stops amusing you. It will cheerfully hand you a recipe, an Instagram caption, or a vacation itinerary you will absolutely ignore, and everyone can walk away pretending that was time well spent.

When you bring that same casual energy into a professional environment, you don’t get a cute souvenir; you get AI workslop—that murky zone where AI spits out work that looks fine at first glance but turns into a time-sucking revision nightmare when you actually try to use it.

We surveyed 1,100 enterprise AI users to find out where AI workslop hits hardest. We dug into the specific friction points that turn a productivity tool into a liability, revealing how much time is actually being wasted on rework and why the gap between AI adoption and AI mastery is wider than most leaders realize.


What is AI workslop?

AI workslop is AI-generated output that looks polished and professional at a glance, but lacks the substance, precision, or context needed to meaningfully complete a task. Because it prioritizes speed and volume over accuracy and utility, AI workslop leads to time wasted on revising, validating, reformatting, re-prompting, and sometimes completely redoing the work, creating a layer of sediment that slows down every process it touches.

This phenomenon exploits the human tendency to treat easy-to-read, confident language (or neat-looking charts) as inherently true or useful. And large language models (LLMs), being probabilistic engines trained to predict the next likely word rather than to cultivate truth, are fantastic at generating text that feels correct without necessarily being correct. The result is output that mimics value while offering the practical utility of decorative fruit: glossy on the surface, fundamentally hollow, and nourishing to absolutely no one.

Workslop colonizes the tasks that feel deceptively straightforward, including reports that sound legit but are devoid of actionable insights, data analysis that charts the wrong thing beautifully, research summaries that invent citations, lengthy meeting notes that capture discussions without decisions, and emails that are technically grammatical but have the emotional intelligence of an unfrosted Mini-Wheat.

58% spend 3+ hours/week revising or redoing AI outputs, but 92% still say AI boosts their productivity

More than 9 in 10 workers say AI makes them more productive, despite the small, inconvenient detail that most of them are spending substantial chunks of their week fixing AI’s mistakes. A full 58% spend three or more hours per week revising or completely redoing AI outputs.

That number worsens when you zoom in: 35% spend at least five hours, and 11% are burning through 10 or more hours every week. The average lands at roughly 4.5 hours per week—more than half a workday—just cleaning up after a tool that has been marketed, with straight faces, as time-saving.

Only 2% say they generally don’t need to revise what AI produces. Everyone else is revising to some degree—fact-checking, massaging phrasing, restructuring, correcting hallucinations—yet 92% still report that AI increases their productivity overall. Half say it significantly boosts productivity, and only 1% admit that AI actually makes them less productive.

The math here suggests that even with all the cleanup work, AI is still saving people more time than it costs them. Or maybe we’ve all just convinced ourselves that the emperor’s new clothes look fantastic because we’ve already committed to the wardrobe. Either way, the productivity gains are real enough to be widely felt.

Zapier’s AI orchestration platform lets you delegate work to AI agents that can take action in all your apps—saving time, reducing errors, lowering costs, and unlocking new strategic opportunities. And when you want a little more human control, Zapier Workflows are there to help you connect your entire tech stack in a deterministic fashion.

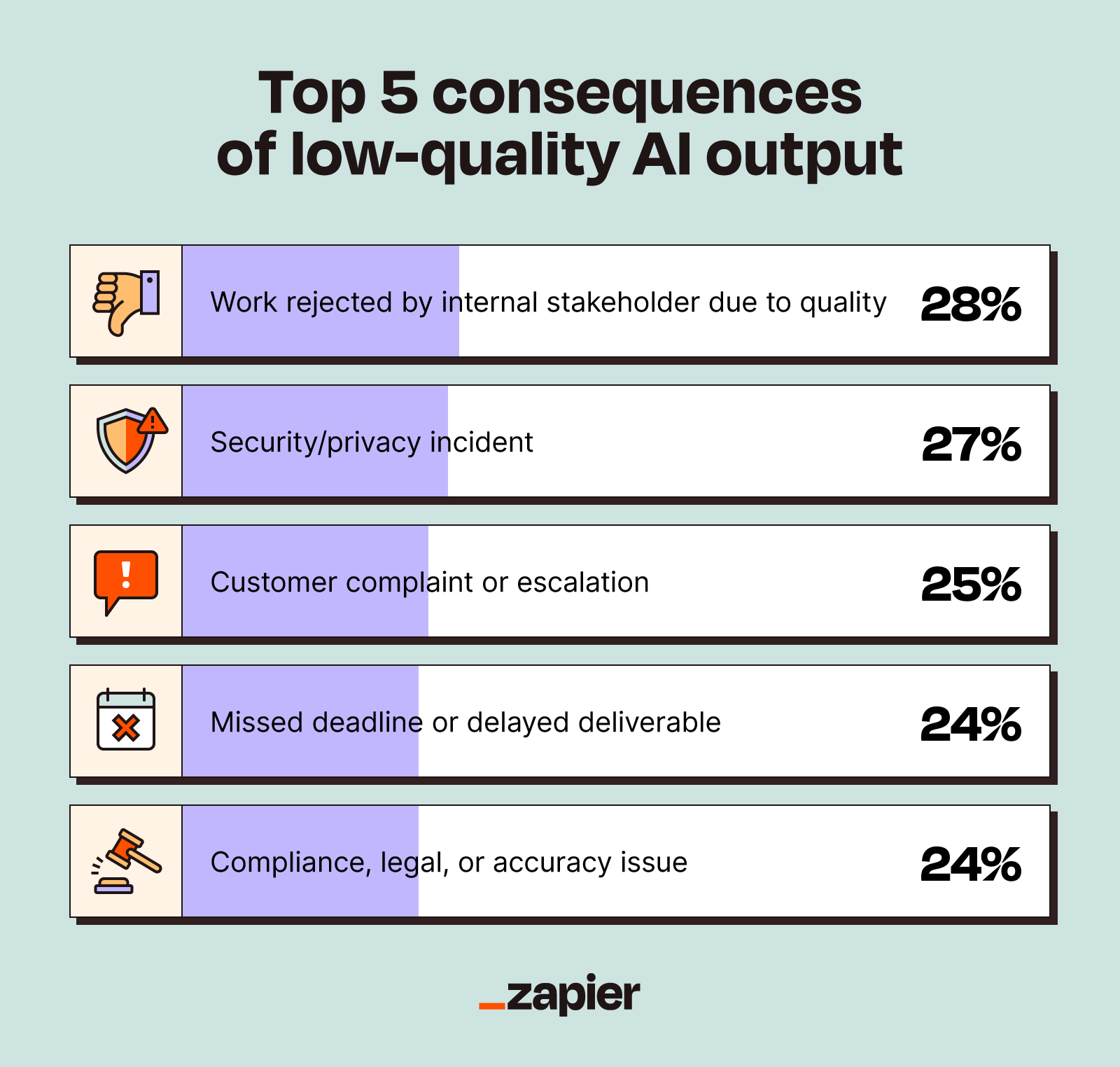

74% have experienced at least one negative consequence from low-quality AI outputs

If workslop were just annoying, we’d all complain, rewrite the doc, and move on. The problem is that it’s not just annoying. It’s risky. Nearly three-quarters of respondents (74%) have experienced at least one negative consequence from low-quality AI outputs, with the most common being:

- Work rejected by an internal stakeholder due to quality issues (28%)
- Privacy or security incident (27%)
- Customer complaint or escalation (25%)
- Missed deadline or delayed deliverable (24%)
- Compliance, legal, or accuracy issue (24%)

Unsurprisingly, there’s a correlation between heavier workslop and more serious fallout. Workers who spend five or more hours per week fixing AI workslop are more than twice as likely to report lost revenue, lost clients, or lost deals compared to those spending less time on cleanup (21% vs. 9%). They’re also more likely to suffer damaged professional credibility (25% vs. 18%).
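
The "more than twice as likely" claim is simple arithmetic on the two pairs of percentages quoted above. As a rough sketch (the function name `relative_likelihood` is made up for illustration), the ratios work out like this:

```python
def relative_likelihood(heavy_pct: float, light_pct: float) -> float:
    """Return how many times more likely the heavy-cleanup group is to report an outcome."""
    return heavy_pct / light_pct


# Percentages quoted in the article: 5+ hours/week of cleanup vs. less than that.
lost_business = relative_likelihood(21, 9)         # lost revenue, clients, or deals
damaged_credibility = relative_likelihood(25, 18)  # damaged professional credibility

print(f"Lost business: {lost_business:.2f}x")
print(f"Damaged credibility: {damaged_credibility:.2f}x")
```

The first ratio clears 2x comfortably; the second is a smaller gap, which matches the article's softer "also more likely" wording.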

So the people working hardest to make AI work and spending the most time fixing its mistakes are the ones getting hit hardest by the ramifications when something slips through. It’s like being punished for trying.

AI workslop intensity, meanwhile, is not evenly distributed. It varies dramatically by function, tracking closely with both how embedded AI is and how costly errors can be.

Engineering, IT, and data roles average five hours each week fixing AI outputs, with 44% spending five-plus hours on it. Worse, 78% report at least one negative consequence from AI outputs. Finance and accounting teams manage to have it even worse in certain respects. They average 4.6 hours per week, with 47% hitting the five-hour mark, and they post the highest rate of negative consequences at 85%. This makes a bleak sort of sense. When your work is technical or financially sensitive, errors aren’t “oops” moments—they’re auditing events, compliance nightmares, and deeply unfun conversations.

By contrast, sales and customer support teams experience lower AI workslop—averaging three hours per week, with only 13% spending five-plus hours—though even there, 62% still report at least one negative consequence.

Even in customer-facing teams with lower average cleanup time, consequences are common. A single off-brand, incorrect, or overpromising customer message can create escalations that erase whatever time you saved drafting it. At least customers are famously chill about being misinformed.

| Function | Average time spent fixing AI/week | % spending 5+ hours/week | % reporting at least one negative consequence |
|---|---|---|---|
| Engineering, IT, and data | 5 hours | 44% | 78% |
| Finance and accounting | 4.6 hours | 47% | 85% |
| Business development | 3.9 hours | 29% | 65% |
| Operations and supply chain | 3.9 hours | 25% | 59% |
| Human resources | 3.8 hours | 26% | 73% |
| Product development | 3.8 hours | 21% | 76% |
| Legal | 3.6 hours | 20% | 70% |
| Marketing | 3.3 hours | 22% | 78% |
| Sales and customer support | 3 hours | 13% | 62% |
| Project management | 2.8 hours | 16% | 69% |

If your leadership is on board but you’re worried about handing the AI reins to non-technical users, Zapier’s no-code approach to AI automation can help. It lets business teams build and orchestrate workflows without sacrificing security and governance or having to ping IT for every minor tweak.

Data analysis, not writing, is the biggest source of AI workslop

Public discourse has been obsessed with AI writing—emails, blog posts, marketing copy, the stuff that feels like AI’s whole brand—but it turns out the real workslop champions are knowledge-dense tasks that require precision and context, edging out the more obvious suspects.

Data analysis and creating visualizations top the list, with 55% saying these tasks require the most cleanup or rework, which is an astonishing outcome if you’ve ever been told that “AI is great with numbers.” Research and fact-finding follow close behind at 52%, with long-form reporting also sitting at 52%.

By comparison, only 46% cite writing emails or customer communications as major workslop zones, and 44% say the same about marketing or creative content. So while we’ve all been arguing about tone and voice and whether a machine can produce a politely upbeat paragraph without giving itself away, AI has been quietly inventing data, making up research citations, and creating charts that look beautiful but are based on absolutely nothing.

Only 3% say AI outputs rarely need correction, which means 97% of us are revising to some degree. But if you’re using AI for anything involving numbers, facts, or research, you’re spending the most time correcting slop. It’s as though AI looked at the tasks where accuracy matters most and thought, “Excellent, these are the ones I shall be wrong about.”

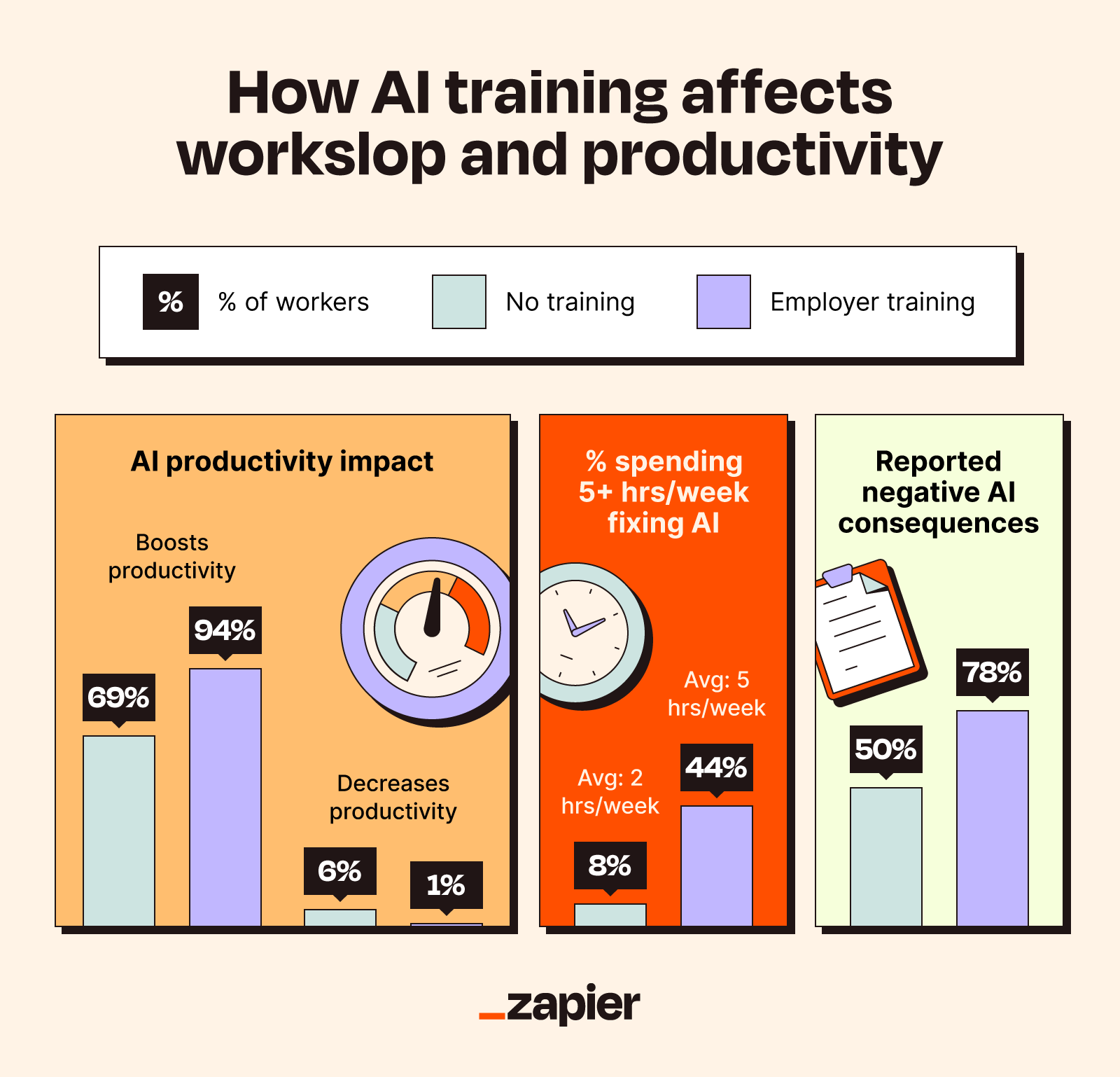

Untrained workers are 6x more likely to say AI makes them less productive

If you want to reduce AI workslop (while keeping whatever productivity gains you’ve managed to wrestle from it), the fastest, most reliable first move is to train employees on how to use and verify AI outputs, not just turn on ChatGPT Enterprise and pray that “innovation” happens.

Among workers who haven’t gotten AI training, 69% say AI boosts productivity (compared to 92% overall, remember), and a full 6% say AI actually decreases their productivity. They spend an average of just two hours each week fixing AI outputs, with 8% crossing the five-hour threshold. They also report way fewer negative consequences—only 50% have experienced one.

In contrast, workers who received employer-provided AI training have a fundamentally different experience—one with more upside and, inconveniently, more wreckage. Here, 94% report productivity gains, and only 1% claim AI negatively impacts productivity. However, this group spends significantly more time on revisions. Trained employees average five hours a week on cleanup (more than double the untrained group), and 44% spend five or more hours revising. Plus, 78% say they’ve run into at least one negative consequence.

Trained workers are spending way more time with AI, way more time fixing it, experiencing way more consequences…and they’re still dramatically more likely to say it’s worth it and it’s making them more productive. They use AI more aggressively, more frequently, and, crucially, in higher-stakes contexts where cleanup is required. (You don’t spend five hours a week correcting AI if you only ask it to rewrite subject lines.)

Meanwhile, untrained folks’ low cleanup time isn’t evidence of superior outcomes so much as evidence of limited exposure. They barely use AI at all, or they use it so sparingly and ineffectively that they never reach the point of breaking anything. They’re like someone who bought an Instant Pot and only uses it to store bananas because they’re too intimidated to figure out the buttons.

97% of workers with access to AI orchestration tools say AI boosts productivity

If training is the first pillar, the second is infrastructure. Specifically, AI orchestration, templates, context, and QA.

When workers have access to AI orchestration tools, the productivity lift is at its most emphatic—97% say AI boosts productivity. Those propped up by prompt templates, libraries, and ongoing training trail only slightly at 95%. Remove all of that—no training, no guidelines, no libraries, no orchestration—and only 77% say AI helps. Still a majority, yes, but also a 20-point drop that’s hard to hand-wave away.

Context matters just as much as tooling. Respondents with access to AI-ready company context—internal documentation, brand voice guides, product fact sheets, project templates—spend more time fixing AI outputs (about five hours a week) yet sustain a remarkable 96% productivity boost rate. Those with minimal or no structured context spend less time fixing AI (closer to three hours), but their productivity sentiment sinks to 82%.

Formal quality assurance processes show the same trade-off. Teams with formal QA, whether that means a documented SOP, a dedicated QA role, manager review, or automated guardrails, average roughly five hours a week fixing AI outputs while maintaining 93% productivity sentiment. Teams with no formal review process average about three hours, with 88% saying AI boosts productivity.

The point isn’t that support systems eradicate workslop. It’s that they keep it from being a net loss. They convert AI from a vague, high-variance experiment into a managed process—one where the extra cleanup is the cost of doing more meaningful work faster, rather than the cost of pretending you are.

How to cut AI workslop and keep productivity gains

AI workslop is not a moral failure. It’s an operational problem. And, as with most operational problems, it gets better when you build a boring, repeatable system around it.

Here are six ways to reduce workslop without relinquishing the productivity gains that 92% of workers are already reporting.

1. Treat AI training as mandatory, not optional

If you rolled out AI tools at your company without providing real training, you failed. You failed your employees, you failed basic change management, and you’re almost certainly failing to extract the ROI you so boldly promised in the kickoff deck. The survey makes this clear—workers with no training are six times more likely to say AI actively harms their productivity (6% vs. 1%) and way less likely to see AI as a net gain (69% vs. 94%).

Offer structured AI training. Teach prompting techniques, yes, but also output evaluation, risk scenarios, appropriate and inappropriate AI use cases, and workflow integration. And then offer ongoing refreshers, because this stuff changes constantly, and also people don’t become good at AI review by absorbing a slide deck. They get better by practicing, receiving feedback, and adjusting their habits, which is, unfortunately, how learning works.

Approach AI training like any other critical system rollout, not a casual 20-minute “lunch and learn” that people can skip if they have a conflict. Make it mandatory, track completion, and tie it to performance expectations if you must resort to the blunt instruments of accountability. Don’t just make it available and hope people opt in, because they won’t (and then they’ll be part of that 6% who think AI is making their job worse, and they’ll be right because you didn’t teach them how to use it).

This is the easiest, highest-impact thing you can do, and if you’re not doing it, stop reading and go schedule a training.

2. Build AI-ready context into your tools

A bonkers 96% of respondents with access to comprehensive, up-to-date context say AI boosts their productivity (versus 82% with minimal or no context). AI is only as good as the information it has access to, and context is the difference between a tool that feels oddly competent and one that produces the sort of generic output you could receive from any sufficiently confident autocomplete engine.

Standardize what “AI-ready context” means for your organization, the way you would standardize any other critical input you expect people to rely on at scale. Define the things the AI model shouldn’t have to guess, like approved terminology, policies and AI compliance requirements, customer promises, and data boundaries.

Package that context into reusable inputs rather than leaving it as institutional knowledge and scattered links. This can include things like customer personas, brand voice documentation, FAQ libraries, and role-specific guardrails. (Sidebar: you should have these anyway because they’re useful for humans, too.)

Finally, feed these knowledge sources into your AI workflows using a tool like Zapier, because the weakest link in any system is the part that relies on a human remembering to do the right thing every single time. If the model needs context to be useful (and it does!), then your infrastructure should deliver that context by default. Otherwise, you’re not building a process; you’re building a superstition, where good outcomes depend on whether someone remembered to paste the sacred paragraph before hitting enter.

3. Formalize review flows for high-risk AI output

With three-quarters of respondents experiencing at least one negative consequence due to AI workslop, “just eyeball it” isn’t a legitimate review strategy. Individual diligence is lovely in the way hand-sewn quilts are lovely, but you can’t scale it, audit it, or rely on it when the stakes involve regulated data and lawyers. So, consider this your official cue to stop framing AI output review as a suggestion and start treating it as what it actually is: a workflow.

Determine which AI outputs require review. Start where consequences are sharpest—anything customer-facing, regulated, or financial—and assume, until proven otherwise, that “looks professional” is not a quality bar but a symptom of workslop.

Then, establish a simple, explicit approval path that imposes just enough friction to prevent catastrophe: checklists that force verification, designated approvers, or dedicated QA staff whose sole purpose is catching AI mistakes before they metastasize. Make sure any human role is explicit, assigned, and visible, not an implied duty that evaporates the moment everyone is busy.
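
To make "explicit, assigned, and visible" concrete, here is a minimal sketch of a review gate. Everything in it is an assumption for illustration: the tag names, the `AIOutput` class, and the approver labels are hypothetical, and a real setup would live in whatever workflow tool you already use.

```python
from dataclasses import dataclass, field

# Hypothetical risk tags, mirroring the categories named above:
# customer-facing, regulated, or financial output.
HIGH_RISK_TAGS = {"customer_facing", "regulated", "financial"}


@dataclass
class AIOutput:
    title: str
    tags: set[str] = field(default_factory=set)


def review_path(output: AIOutput, approvers: dict[str, str]) -> list[str]:
    """Return the named approvers an output must pass, or an empty list if none."""
    risky = sorted(output.tags & HIGH_RISK_TAGS)
    # A risky tag with no assigned owner is an error, not a silent skip.
    missing = [tag for tag in risky if tag not in approvers]
    if missing:
        raise ValueError(f"No approver assigned for: {missing}")
    return [approvers[tag] for tag in risky]


approvers = {
    "customer_facing": "support-lead",
    "regulated": "compliance",
    "financial": "controller",
}
draft = AIOutput("Refund policy email", {"customer_facing", "financial"})
print(review_path(draft, approvers))  # ['support-lead', 'controller']
```

The design choice worth copying is the hard failure when a risky category has no owner: that is the "implied duty that evaporates" case, surfaced before anything ships.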

We’re not introducing bureaucracy for the sake of process theater. The goal is to prevent expensive mistakes that can erase whatever productivity gains you might be celebrating. With QA, you still get the speed of AI, but you stop paying for it in downstream rework, reputational harm, and the kind of incidents that lead to a meeting titled “Lessons Learned.”

4. Monitor “workslop” as an actual metric

The average worker spends 4.5 hours a week cleaning up AI outputs, and the heavier the workslop, the more frequently it drags real business consequences behind it like tin cans tied to a bumper. It deserves to be tracked with the same sober attention you’d give to latency, churn, or any other operational expense.

Ask your teams two simple questions:

- How many hours per week are you spending revising, verifying, correcting, or redoing AI outputs?
- What types of errors do you see most often?

Use the answers to prioritize changes—process tweaks, better prompt templates, improved context, orchestration, review gates, training refreshers, tooling changes, etc. Then, track the metric over time so you can tell whether your interventions are working, instead of relying on anecdotes and the sudden absence of complaints as your key performance indicator.

Use the metric to show improvement. For example: “We were spending an average of five hours per week on AI cleanup in Q1. We implemented better training and context systems, and now we’re down to three hours in Q2.”

That’s proof that your AI infrastructure investments are working and, more critically, it’s a story you can tell leadership. But you can’t improve what you don’t measure, and right now most companies aren’t measuring workslop at all—they’re just experiencing it, suffering through it, and wondering vaguely why their AI initiatives aren’t delivering the productivity gains they expected.
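
If the answers to those two questions end up in a spreadsheet, the tracking itself can be very simple. The sketch below assumes self-reported weekly hours grouped by quarter; the sample numbers are invented for illustration, and `summarize_cleanup` is a hypothetical helper, not part of any tool.

```python
from statistics import mean


def summarize_cleanup(hours_by_quarter: dict[str, list[float]],
                      heavy_threshold: float = 5.0) -> dict[str, dict[str, float]]:
    """Summarize self-reported weekly AI cleanup hours for each quarter."""
    summary = {}
    for quarter, hours in hours_by_quarter.items():
        heavy = sum(h >= heavy_threshold for h in hours)
        summary[quarter] = {
            "avg_hours": round(mean(hours), 1),
            # Share of respondents at or above the heavy-cleanup threshold.
            "share_heavy": round(heavy / len(hours), 2),
        }
    return summary


# Invented responses, for illustration only.
responses = {
    "Q1": [6.0, 5.0, 4.0, 5.0],
    "Q2": [3.0, 2.0, 4.0, 3.0],
}
summary = summarize_cleanup(responses)
for quarter, stats in summary.items():
    print(quarter, stats)
```

Tracking the share of people at five-plus hours alongside the average is a deliberate choice here, since the survey ties that heavy-cleanup group to the most serious consequences.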

Zapier brings together all your AI data in one place so you can actually measure ROI. By adding AI directly into the tools and workflows your team already uses, Zapier helps move AI from one-off tests to coordinated processes that improve work, measurably, across the business.

5. Focus on your highest-risk tasks and teams first

Resist the urge to treat AI governance like an indiscriminate gardener, flinging fertilizer with abandon in the hope that something, somewhere, might flourish. Instead, concentrate your efforts where errors are most costly and most common.

The survey shows clear hotspots: data analysis, research, and long-form reporting are the top workslop tasks, while engineering, IT, data, and finance departments face the most intense cleanup work.

Tighten workflows and guardrails in those specific areas. Start with your engineering team’s data workflows, or your finance team’s reporting processes, or whatever your specific highest-risk area is. Set clear acceptance criteria for AI-generated work, mandate review for risky outputs, confirm data sources, redo key calculations, and flag anything that needs clarification. Use templates to shape output and orchestration to guide the process, rather than trusting folks to reinvent every process on the fly.

The idea isn’t to boil the ocean—it’s to stop it from boiling you. Figure out where the risk is highest, then expand outward. You’ll get more impact faster, prevent the most costly mistakes, and build learnings you can apply in other areas later.

6. Give workers better templates, not just more tools

Nearly everyone (95%) says they are more productive when they have access to prompt libraries and ongoing training, which is a rather pointed reminder that the objective is not to carpet-bomb your organization with AI tools and then call the resulting confusion “innovation.” Instead of deploying yet another app, give your team a shortcut to getting decent results from the ones they already have.

Create prompt templates for the tasks most prone to workslop, such as data analysis, research briefs, executive reports, and customer responses. These templates should constrain the model into producing the kind of output that can be reviewed, verified, and shipped without an editor’s blood pressure spiking.

Additionally, develop comprehensive AI playbooks detailing the entire workflow: when to use AI, when not to, what review is required, how the output is validated, and what “ship-ready” actually means in that context.

Bake verification into the workflow rather than hoping it arrives spontaneously. Have the model list its assumptions, outline its reasoning, show calculation steps, flag uncertainties, and provide alternatives. Make sure it shows its work by asking what sources it used, what conclusions it drew, and what info it couldn’t verify. Force the machine to expose the scaffolding instead of presenting you with a finished facade and daring you to find the load-bearing faults by touch.
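
One way to bake that in is a reusable prompt template that always asks for the scaffolding. This is a rough sketch, not a recommended wording: the section names and the `build_prompt` helper are assumptions, and the exact phrasing would need tuning for your model and task.

```python
from string import Template

# Hypothetical template; the wording is illustrative only.
VERIFIED_PROMPT = Template(
    "Task: $task\n"
    "Context: $context\n\n"
    "Before the final answer, include these sections:\n"
    "$sections"
)

# Sections drawn from the verification steps described above.
VERIFICATION_SECTIONS = [
    "Assumptions",
    "Reasoning outline",
    "Calculation steps",
    "Uncertainties",
    "Alternatives considered",
    "Sources used",
    "Claims I could not verify",
]


def build_prompt(task: str, context: str) -> str:
    """Build a prompt that requires the model to show its work."""
    sections = "\n".join(
        f"{i}. {name}" for i, name in enumerate(VERIFICATION_SECTIONS, start=1)
    )
    return VERIFIED_PROMPT.substitute(task=task, context=context, sections=sections)


prompt = build_prompt(
    task="Summarize Q2 churn by plan tier",
    context="Churn = cancelled accounts / accounts active at start of quarter",
)
print(prompt)
```

Because the sections live in one list, a reviewer can check an output against the same seven headings every time instead of guessing what the model skipped.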

Finally, use Zapier to standardize execution, because consistency cannot be achieved by gently asking everyone to “please follow the playbook” and then acting surprised when they don’t. Zapier can encode patterns into workflows for recurring tasks (report generation, email drafting, meeting notes, analytics summaries, etc.) so the right context is pulled in, the right prompt is used, the right formatting is applied, and the right review step happens before anything escapes into the world.

Zapier, the most connected AI orchestration platform, can help you get over the AI integration gap. With more than 8,000 integrations across the tools you already use, Zapier can securely bring AI to all your workflows and set you up for a future where enterprise-wide AI is a must instead of a “nice to have.”

Curb AI workslop with Zapier

AI workslop is what happens when AI adoption outpaces the workflows needed to make it reliable. On paper, the story looks great: 92% of workers say AI boosts productivity. And yet, the average employee still spends more than half a workday every week repairing AI outputs, and 74% report having experienced at least one negative consequence.

The solution is better infrastructure, not fewer tools. Zapier helps teams make that shift by providing an orchestration backbone to standardize how AI is used across the organization. Connect your AI tools directly into your existing workflows and knowledge sources, reuse proven templates, route high-risk outputs through approvals, and automate the handoffs that make AI usable at scale. Build reliable orchestrations once and deploy them everywhere, so you get consistency, governance, and measurable improvement rather than a thousand slightly different experiments and a shared sense of exhaustion.

Start building AI-powered systems that mitigate workslop, so you can enjoy the productivity gains without also signing up for the weekly ritual of cleaning up after them.

The survey was conducted by Centiment for Zapier between November 13 and November 14, 2025. The results are based on 1,100 completed surveys. In order to qualify, respondents were screened to be U.S. AI users who work at companies with 250+ employees. Data is unweighted, and the margin of error is approximately +/-4% for the overall sample with a 95% confidence level.
