Let me guess: your business has data everywhere. It’s in your CRM, your marketing platform, your support desk. But getting that data to talk to each other is about as enjoyable as eating your own teeth.

If that sounds familiar, you’re in good company. Most businesses are drowning in data silos and wasting hours manually copying data between systems, fixing errors, and waiting for reports that should update automatically.

Data orchestration is a process that turns manual, error-prone tasks into seamless, automated workflows, giving you clarity where there used to be confusion. And Zapier makes this possible even for teams without technical expertise, connecting your entire tech stack and orchestrating data flows with a no-code interface.

If your organization has more data than a hamster has cheek space, someone needs to make sense of it all. That someone could be you if you keep reading this article.

What is data orchestration?

Data orchestration is the automated process of managing the flow of data across various systems, tools, and workflows within an organization. It involves collecting, transforming, standardizing, and synchronizing data to make sure it lands in the right place, at the right time, and in the right format for your team to use.

Business data often lives in more places than a wealthy person’s money. You’ve got customer information in your CRM, sales data in a spreadsheet, and inventory numbers in your ERP system. Data orchestration takes all these siloed fragments, cleans them up, and delivers them wherever they need to go.

Without orchestration, your data processes might involve:

- Exporting customer data from your CRM
- Cleaning it in Excel
- Importing it to your analytics tool
- Creating a report
- Emailing it to stakeholders

This happens weekly, if you’re lucky.

With an orchestration tool like Zapier, this entire process runs automatically. Data flows from your CRM to your analytics platform, it gets cleaned and enriched along the way, reports update in real time, and stakeholders get notifications when new insights are available. It’s like a very boring Santa Claus who delivers data instead of gifts and holiday cheer.

And just so we’re clear, data orchestration isn’t the same thing as data pipeline orchestration. While data orchestration is the overarching concept, data pipeline orchestration specifically focuses on managing the sequential steps, or “tasks,” within data workflows.

Core components of data orchestration

To understand how orchestration works in practice, it helps to break it down into the key aspects that keep data accurate, connected, and usable:

- Automation replaces manual tasks with programmed workflows. When new data arrives, automation springs into action like my dog hearing a bag of shredded cheese being opened.
- Data management handles moving data from point A to point B, changing its format, and making sure it’s not garbage. Quality checks happen here, too, so you don’t accidentally make a million-dollar decision based on a typo.
- Workflow management controls the sequence and timing of tasks. You can’t analyze data before you collect it, just like you can’t eat a cake before you bake it (unless you eat raw cake batter, but that’s not recommended by health professionals or data scientists).
- Integration connects different systems and tools. Your data lives in many places: databases, APIs, SaaS apps, a new cloud service that was invented last Tuesday.
- Monitoring and error handling watches everything and panics appropriately when things go wrong. This component tracks every task, detects any failures, alerts the right people, and often fixes issues automatically.
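To make the workflow management and error handling pieces a bit more concrete, here’s a minimal Python sketch of what an orchestrator does under the hood: run tasks in dependency order, retry the ones that fail, and alert someone when a task gives up. The function name `run_workflow`, the task names, and the retry count are all hypothetical, and this isn’t how any particular platform implements it.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_workflow(tasks, dependencies, max_attempts=3, alert=print):
    """Run tasks in dependency order, retrying failures.

    tasks: maps a task name to a zero-argument callable.
    dependencies: maps a task name to the set of tasks that must finish first.
    Returns the names of the tasks that completed, in the order they ran.
    """
    order = TopologicalSorter(dependencies).static_order()
    completed = []
    for name in order:
        for attempt in range(1, max_attempts + 1):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception as exc:
                if attempt == max_attempts:
                    # Out of retries: tell a human and stop the workflow.
                    alert(f"Task {name!r} failed after {attempt} attempts: {exc}")
                    return completed
    return completed
```

Calling it with `dependencies = {"report": {"transform"}, "transform": {"collect"}}` runs `collect`, then `transform`, then `report`, so you never try to analyze data before you’ve collected it.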

Zapier handles all of this for you. You just need to tell it what to do, and it takes care of the rest.

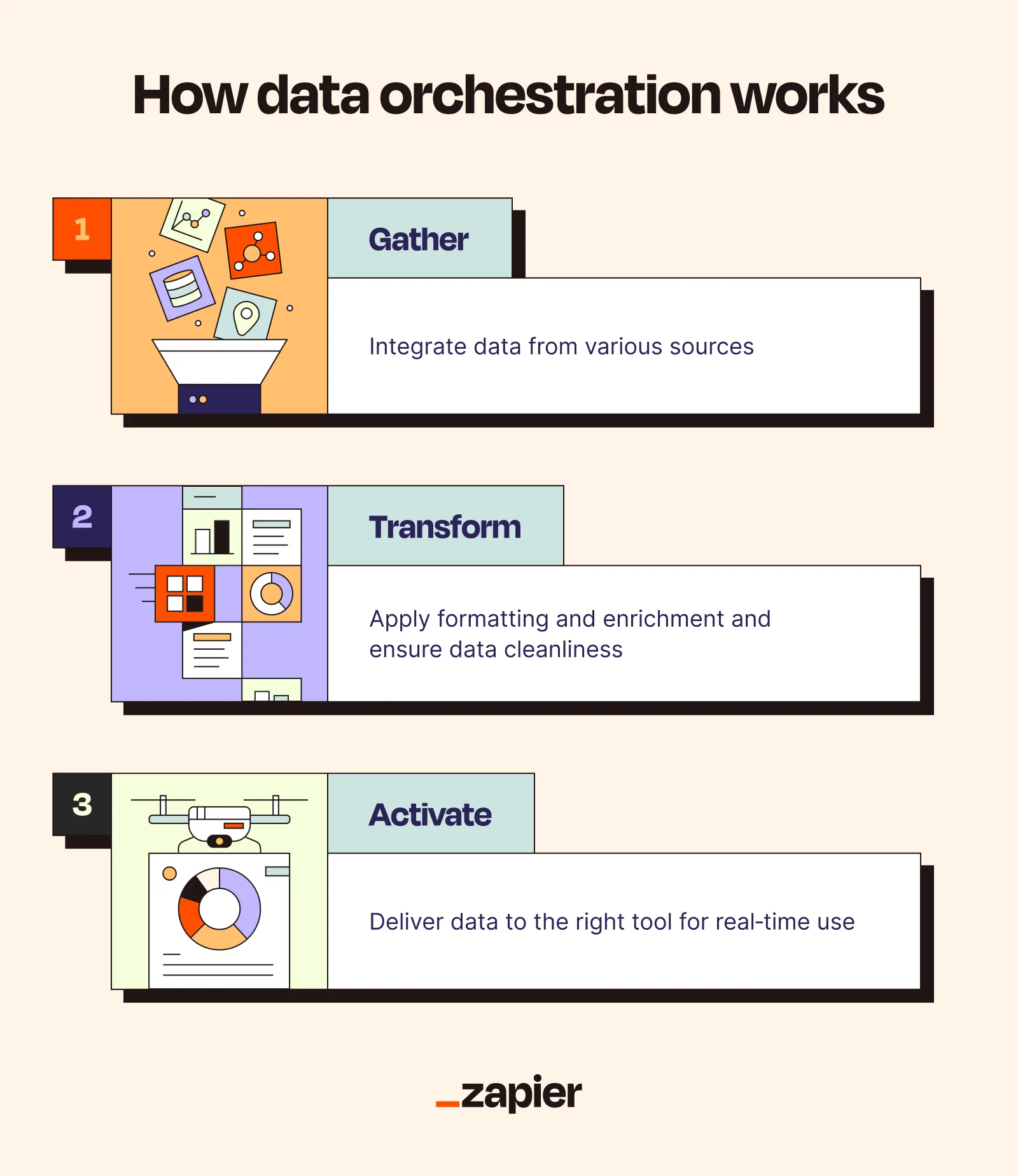

How does data orchestration work?

Data orchestration works in three main steps:

1. Gather

The first challenge is always getting your data in one place. The orchestration platform connects directly to all your data sources and pulls everything together. With Zapier, that means pulling from 8,000+ apps (plus private apps via webhooks) so you can connect every system you rely on.

In practice, this might mean connecting to your customer support tool for ticket information, your Shopify store to get order data, and your email marketing platform for campaign metrics. Each system has its own API, data format, and quirks.

Your data orchestration tool is designed to navigate the unique API, formatting, and authentication rules of each system. If a date is formatted as MM/DD/YYYY in one place and YYYY-MM-DD in another, the platform reconciles the difference automatically. This process gives you a single, consistent dataset ready for the next step.
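If you’re curious what reconciling date formats looks like in code, here’s a small Python sketch. It assumes your sources only emit the two formats mentioned above and that slash-separated dates are month-first; the function name `normalize_date` and the format list are illustrative, not part of any platform.

```python
from datetime import datetime

# Assumption: these are the only two formats our source systems produce.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")


def normalize_date(raw: str) -> str:
    """Return the date as an ISO 8601 string (YYYY-MM-DD)."""
    text = raw.strip()
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # Not this format; try the next one.
    raise ValueError(f"Unrecognized date format: {raw!r}")
```

Both `normalize_date("03/14/2024")` and `normalize_date("2024-03-14")` return `"2024-03-14"`, while anything outside the known formats raises an error instead of silently passing through.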

2. Transform

The transformation process is essential because raw data is like an uncooked potato—theoretically edible, but nobody wants to consume it. This step involves:

- Cleaning up errors, duplicates, and other garbage data (contrary to popular belief, you don’t actually have half a dozen customers named “Test Test”)
- Converting everything into a consistent format (names are structured the same way, phone numbers lose their creative punctuation, etc.)
- Enriching the data with additional information where possible (job titles, company info, or whatever else might be useful)
- Flagging suspicious data, like customers who claim to be 200 years old or live at 221B Baker Street
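Here’s a rough Python sketch of the cleaning, formatting, and flagging steps from the list above. The field names (`name`, `email`, `phone`, `age`) and the age cutoff are assumptions made for the example, and enrichment is left out because it depends on whatever external data source you’d use.

```python
import re

MAX_PLAUSIBLE_AGE = 120  # Assumed cutoff for flagging suspicious ages.


def clean_phone(raw: str) -> str:
    """Strip the creative punctuation, keeping digits only."""
    return re.sub(r"\D", "", raw)


def transform(records):
    """Drop test rows and duplicates, standardize formats, flag odd ages."""
    seen_emails = set()
    cleaned = []
    for record in records:
        # Collapse extra whitespace and use consistent capitalization.
        name = " ".join(record["name"].split()).title()
        email = record["email"].strip().lower()
        if name == "Test Test" or email in seen_emails:
            continue  # Garbage row or a duplicate of one we already kept.
        seen_emails.add(email)
        age = record.get("age")
        cleaned.append({
            "name": name,
            "email": email,
            "phone": clean_phone(record.get("phone", "")),
            "suspicious": age is not None and not (0 < age <= MAX_PLAUSIBLE_AGE),
        })
    return cleaned
```

Duplicates are detected by normalized email here, which is a simplification; real deduplication usually needs fuzzier matching.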

3. Activate

Finally, the orchestration tool automatically pipes this processed data to wherever it’s actually needed, whether it’s for business intelligence, dashboarding, machine learning, or other analytics apps.

In practice, this could mean automatically adding a customer to a “new buyers” segment in your email tool the moment they make a purchase, refreshing executive dashboards with the very latest sales metrics, or triggering a personalized follow-up from a sales rep as soon as a potential customer visits your pricing page.

Activation is a continuous process. It’s not a one-time thing like getting your wisdom teeth extracted. It’s more like breathing—constant, automatic, and very bad if it stops.
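As a loose illustration of activation, here’s a tiny Python sketch where one event (a purchase) fans out to every destination that cares about it. The `Activator` class, the event name, and the handlers are all made up for the example; a real platform would call your email tool’s and dashboard’s APIs instead of plain functions.

```python
from collections import defaultdict


class Activator:
    """Fan processed records out to every destination registered for an event."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def on(self, event_type, handler):
        """Register a destination (any callable) for an event type."""
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        """Deliver the payload to each destination; return how many received it."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)
```

Each new purchase published through `publish("purchase", ...)` reaches every registered handler, which is the “constant and automatic” part: nothing waits for a person to run an export.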

Why data orchestration matters

Data orchestration matters because otherwise you’re stuck in data purgatory, manually moving numbers around like it’s 1998 and you’re working in Lotus Notes. Here’s what you actually get out of it:

- Reduced manual effort: Remember that scene in “The Office” where Kevin drops the giant pot of chili? That’s what it feels like trying to manually move and organize data. Orchestration eliminates all that tedious copying, pasting, reformatting, and inevitable crying when you realize you’ve been working on the wrong version for six hours.
- Better data quality and consistency: When humans handle data manually, errors creep in: files get corrupted, steps get skipped, and different people apply different standards. Orchestration enforces consistency. When human error is removed from routine processes, error rates drop from “embarrassing” to “acceptable.”
- Faster time to insight: Instead of waiting days or weeks for data to be compiled and analyzed, orchestration gives you insights quickly, sometimes in real time.
- Improved collaboration: When data processes are automated and reliable, different teams can work with the same information. Engineers don’t bottleneck analysts, analysts don’t hold up business decisions, and everyone has access to the same clean, reliable data without the classic “well, my numbers show something different” argument.
- Better compliance and security: Orchestrated data flows can enforce security measures and ensure regulatory compliance. Everything flows through monitored, secured pipelines instead of data scattered across 14 personal Dropbox accounts and a thumb drive from 2013.

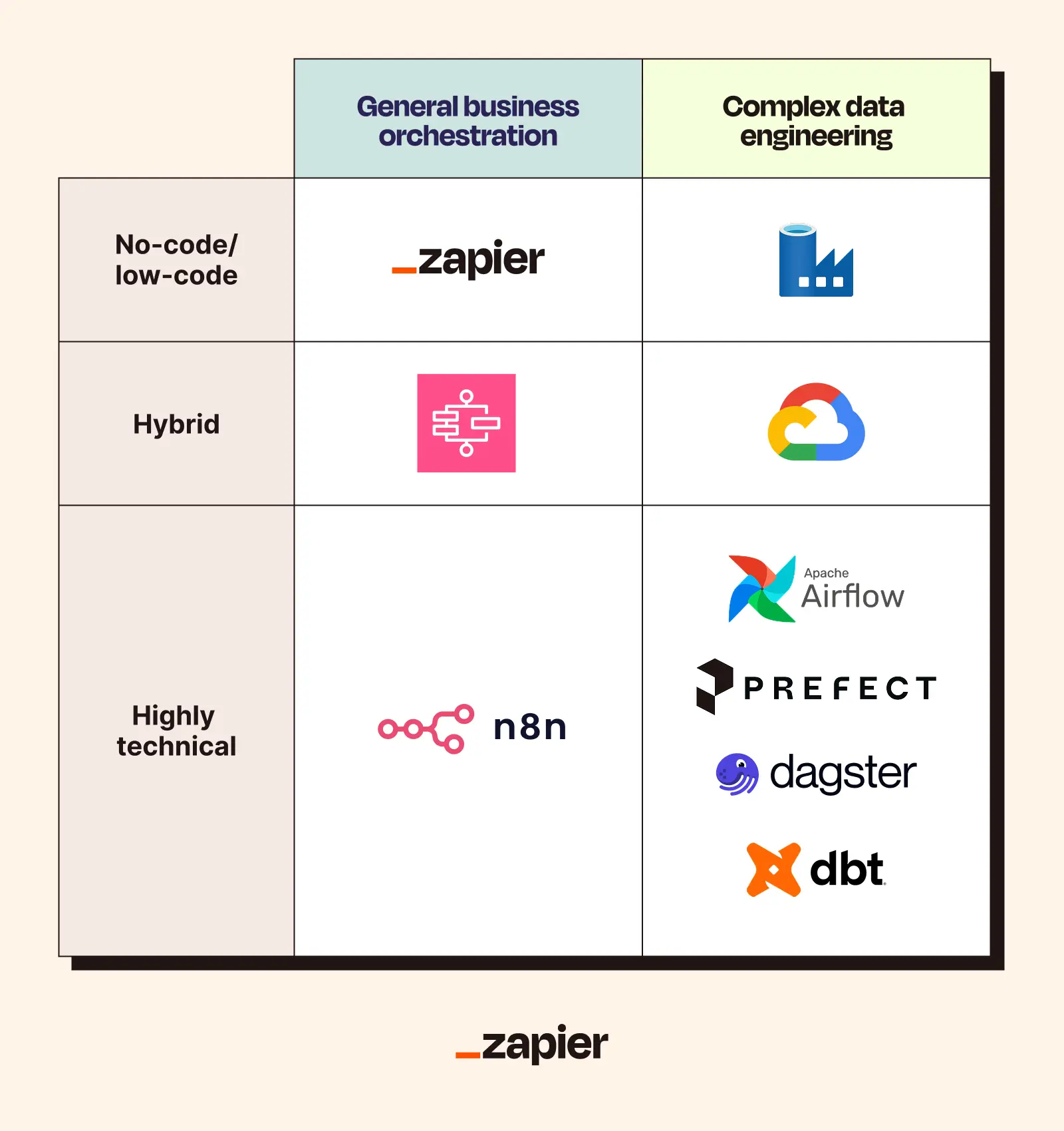

Popular data orchestration tools

Here are some popular data orchestration tools—some for the code-curious, some for the “please never make me open Terminal” crowd.

- Zapier: Zapier is an AI orchestration platform built for non-technical teams. It connects with over 8,000 apps, making it easy to move data across business software. Zapier also includes built-in AI features to transform and summarize data on the fly, so you can clean and enrich information as it moves. It’s perfect for enterprise teams that need results quickly without IT involvement.
- Apache Airflow: Originally developed at Airbnb and now an Apache Software Foundation project, this open source platform lets you author, schedule, and monitor workflows using Python. This is the serious, grown-up option that actual data engineers use.
- Prefect: Prefect is also open source and Python-based, but it’s designed to be more user-friendly and flexible. It handles dynamic workflows better than Airflow.
- Dagster: This is another open source, Python-based framework that takes a slightly different approach by focusing on data assets rather than just tasks. Dagster implements a data-first approach with built-in quality checks.
- AWS Step Functions: If you’re already deep in the Amazon Web Services (AWS) ecosystem, Step Functions lets you coordinate multiple AWS services into serverless workflows.
- Azure Data Factory: Microsoft’s cloud-native orchestration offering lets you create data-driven workflows for orchestrating and automating data movement and transformation.
- Google Cloud Composer: This is Google’s managed Apache Airflow service. If you’re a Google Cloud devotee, this gives you Airflow without the hassle of setting it up yourself.
- dbt: While not strictly an orchestration tool on its own, dbt has become a popular part of the modern data stack for handling transformations.

Best practices for orchestrating data

Here’s how to orchestrate data without making the mistakes everyone else makes.

Start with clear objectives

Don’t think about tools or workflows yet. Just finish this sentence: “We’ll know this is working when…”

A great answer is something specific, like, “…our monthly sales report is waiting in every stakeholder’s inbox by 9 a.m. on the first of the month.”

When you start with a clear, measurable goal like that, you make much smarter decisions. When you don’t, you end up with a complicated, over-engineered system that doesn’t actually help anyone.

It’s the same reason you don’t go grocery shopping without a list—because if you do, you’ll come home with six boxes of Pop-Tarts, a rotisserie chicken, and zero of the ingredients you needed for dinner.

Design modular workflows

Avoid writing monolithic, tangled pipelines that no one can understand or maintain. Instead, get into the habit of breaking large processes into smaller, single-purpose tasks that can be combined in different ways.

For instance, don’t build a customer data workflow as one massive process. Create separate modules for data extraction, cleaning, enrichment, and loading. These modules can be tested independently, and there’s a good chance you can reuse one of them in three other projects.

Modular design makes troubleshooting easier. When a task fails, you can isolate the problem quickly instead of rifling through thousands of lines of code. It’s like Christmas lights where one bulb doesn’t take down the whole string (finally).
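If you do end up writing code, the modular idea can be sketched in a few lines of Python: each step is a small function that takes rows and returns rows, and a pipeline is just a list of steps. Every name here (`extract`, `clean`, `enrich`, `make_loader`, `build_pipeline`) is hypothetical, and the steps are deliberately simplistic stand-ins.

```python
def extract(source_rows):
    """Stand-in for pulling rows from a CRM export or an API."""
    return list(source_rows)


def clean(rows):
    """Trim and lowercase emails; drop rows that don't have one."""
    return [
        {**row, "email": row["email"].strip().lower()}
        for row in rows
        if (row.get("email") or "").strip()
    ]


def enrich(rows):
    """Add the email domain as a simple example of enrichment."""
    return [{**row, "domain": row["email"].split("@")[-1]} for row in rows]


def make_loader(destination):
    """Build a load step that appends rows to the given destination list."""
    def load(rows):
        destination.extend(rows)
        return rows
    return load


def build_pipeline(*steps):
    """Chain single-purpose steps into one callable workflow."""
    def run(data):
        for step in steps:
            data = step(data)
        return data
    return run
```

Because each step stands alone, `build_pipeline(extract, clean, enrich, make_loader(warehouse))` and `build_pipeline(clean)` can share the same `clean` function, and a failure points you at one small function instead of the whole workflow.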

Ensure visibility and transparency

Your first priority should be visibility. Adopt tools that provide dashboards and lineage tracking (where data came from and where it’s going). You need to see what’s happening in your data workflows, especially when something breaks.

A good orchestration system will give you transparency tools that:

- Show you where in the workflow the problem happened.
- Keep a history of past runs so you can spot trends.
- Send alerts before the problem becomes catastrophic.

Zapier, for instance, provides comprehensive admin controls and activity logs, so team leaders can monitor all automated workflows, manage user permissions, and track data flow across the organization. This level of transparency builds trust. It’s like the difference between a magic trick and a science experiment. When people can see how data flows, they trust the results more.
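For a sense of what sits behind those transparency features, here’s a simplified Python sketch of a run history that records where each failure happened and decides when to raise an alert. The `RunHistory` class, its method names, and the 30% threshold are assumptions for illustration, not a description of Zapier’s activity logs.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Run:
    workflow: str
    succeeded: bool
    failed_step: Optional[str] = None  # Where in the workflow it broke, if it did.
    finished_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class RunHistory:
    """Keep past runs so trends are visible and alerts fire early."""

    def __init__(self):
        self.runs = []

    def record(self, workflow, succeeded, failed_step=None):
        self.runs.append(Run(workflow, succeeded, failed_step))

    def failure_rate(self, workflow, last_n=10):
        """Share of the most recent runs of this workflow that failed."""
        recent = [run for run in self.runs if run.workflow == workflow][-last_n:]
        if not recent:
            return 0.0
        return sum(not run.succeeded for run in recent) / len(recent)

    def should_alert(self, workflow, threshold=0.3, last_n=10):
        """Alert once recent failures reach the threshold."""
        return self.failure_rate(workflow, last_n) >= threshold
```

Looking at the rate over recent runs, rather than waiting for a total outage, is one simple way to get the alert before the problem becomes catastrophic.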

Govern data quality

This is the part where I scream: DO. NOT. TRUST. YOUR. DATA.

Bad data is worse than no data, like how wrong directions are worse than being lost. So, build validation rules and error handling directly into your orchestration workflows.

Check your data at the source. Make sure emails are valid, sales amounts fall within expected ranges, and you aren’t passing along empty fields. Don’t offload your data integrity problems to other systems; own it in your workflow.

Zapier Formatter and Paths can help here. Use Formatter to standardize data formats, and use Paths to route data differently based on quality checks.
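If you’d rather see the idea in code, here’s a plain Python sketch of validation rules plus quality-based routing. It isn’t how Formatter or Paths work internally; the field names, the amount range, and the deliberately loose email pattern are all assumptions for the example.

```python
import re

# Loose sanity check, not a full email validator.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
REQUIRED_FIELDS = ("customer_id",)  # Assumed required field for this example.


def validate(record, min_amount=0, max_amount=100_000):
    """Return a list of problems; an empty list means the record is clean."""
    problems = []
    if not EMAIL_PATTERN.match(record.get("email") or ""):
        problems.append("invalid email")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or not (min_amount <= amount <= max_amount):
        problems.append("amount out of range")
    for name in REQUIRED_FIELDS:
        if record.get(name) in (None, ""):
            problems.append(f"missing {name}")
    return problems


def route(records):
    """Send clean records onward and quarantine the rest with their reasons."""
    accepted, quarantined = [], []
    for record in records:
        problems = validate(record)
        if problems:
            quarantined.append((record, problems))
        else:
            accepted.append(record)
    return accepted, quarantined
```

Keeping the reasons alongside each quarantined record means whoever fixes the data knows exactly what failed, instead of discovering it downstream.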

Prioritize scalability

If you’ve ever tried to stuff yourself into jeans from high school, you already know why scalability matters. What works for 10,000 records may crumble at 10 million.

On-premises solutions require manual capacity planning. Unless you have a cogent reason to manage your own hardware, choose a cloud-first architecture that can scale with you. That way, you don’t have to rebuild everything from scratch when your customer base suddenly grows tenfold (a problem I personally have never experienced).

Security should be non-negotiable. Look for platforms like Zapier that offer enterprise-grade security features like data encryption and robust access controls, and that are GDPR, SOC 2 Type II, and CCPA compliant.

Also, plan for both data volume growth and workflow complexity growth. Today’s simple task might be tomorrow’s intricate pipeline with all sorts of branches and error handling paths, so build in flexibility from the start.
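One common way to keep a workflow from crumbling as volume grows is to process records in fixed-size batches instead of loading everything into memory at once. That technique isn’t specific to any tool mentioned here, and the helper name `in_batches` is made up, but a minimal Python sketch looks like this:

```python
from itertools import islice


def in_batches(records, batch_size):
    """Yield lists of up to batch_size records from any iterable."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return  # Source exhausted.
        yield batch
```

The same loop (`for batch in in_batches(rows, 1000): ...`) works whether `rows` is a 10,000-item list or a generator streaming 10 million records, because only one batch is held in memory at a time.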

Choose the right tools for your team

Picking the wrong orchestration tool is exactly like the time my wife came home with a Nespresso machine instead of a coffee maker. I was expecting a basic Mr. Coffee situation—not a tiny caffeine bazooka that produces one (1) ounce of extremely aggressive brown water at a time and comes with a noisy milk frother attachment that scares the dog.

Sure, the Nespresso technically made coffee, but it wasn’t the right coffee maker for us. And every morning, as I drink thimbleful after thimbleful while hoping I don’t vibrate into another dimension, I stare into the middle distance, ruing the day she dragged that cursed Nespresso into our lives.

That’s what it’s like to get locked into the wrong data orchestration platform. Remember that the goal is to reduce bottlenecks, not create new ones by implementing a system no one understands, even though it’s the most technically impressive option on the market.

When choosing software:

- Pick something that fits your team’s skill level.
- Make sure it integrates with the systems you already use.
- Check the pricing model so you’re not selling plasma to cover API overages.

If you’re new to orchestration, a no-code platform like Zapier can get you quick wins without forcing you to read 900 pages of Python docs or know what a Kubernetes is.

Getting started with data orchestration using Zapier

The best way to understand data orchestration is to start using it. Zapier makes this easy, even if you’ve never built an automated workflow before. It lets you connect the tools you already use and orchestrate entire multi-step workflows, all without touching code.

Try Zapier for free and give yourself the gift of never manually exporting another CSV again.
