I use camelcamelcamel whenever I’m planning to buy an expensive product from Amazon. It uses web scraping to track product prices and notifies you if a price drops below a specified amount.

But web scraping is for more than getting a deal on something you definitely don’t need anyway. I’ve been using web scrapers recently while vibe coding—they help me get more out of the massive amount of data that exists on the internet. And especially when combined with Zapier, web scraping can add a lot of context and intelligence to your business workflows.

So let’s take a look at how web scraping works, which web scraping tools you should use, and how you can get started.

What is web scraping?

Web scraping is when automated programs or crawlers visit websites, extract specific data, and save it in a structured format for you to use somewhere else.

It’s not a new technology. The World Wide Web Wanderer, which lots of folks consider the first web scraper, came about in 1993, just a few years after the web itself. But now it’s a lot more sophisticated. It can do everything from tracking prices to gathering lead data to extracting information about your competitors.

How does web scraping work?

Web scraping mimics what you do when browsing the web, just at machine speed and scale. Here’s the basic process (though what’s happening under the hood is a lot more complicated):

- The scraper visits a web page.
- It reads the HTML code (the underlying structure).
- It finds the specific data you’re looking for (e.g., product prices, article titles, company sizes).
- It extracts that data into a usable format for you.
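To make the basic process above concrete, here is a minimal sketch that uses only Python's standard library. The `price` class name, the sample HTML, and the function names are all assumptions made for illustration; a real page will need its own selectors, and the network call in `fetch` is defined but not run so the example works offline.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


def fetch(url):
    """Visit the page and read its HTML as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


class PriceParser(HTMLParser):
    """Find the data: collect the text inside every <span class="price">."""

    def __init__(self):
        super().__init__()
        self.in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "span" and "price" in classes:
            self.in_price = True

    def handle_endtag(self, tag):
        if tag == "span":
            self.in_price = False

    def handle_data(self, data):
        if self.in_price and data.strip():
            self.prices.append(data.strip())


def extract_prices(html):
    """Extract the data into a usable format (a list of floats)."""
    parser = PriceParser()
    parser.feed(html)
    return [float(price.lstrip("$")) for price in parser.prices]


# Hypothetical stand-in for fetch("https://example.com/products").
SAMPLE_HTML = """
<ul>
  <li>Desk lamp <span class="price">$24.99</span></li>
  <li>Monitor arm <span class="price">$89.00</span></li>
</ul>
"""

print(extract_prices(SAMPLE_HTML))
```

In practice you would pass the result of `fetch(...)` to `extract_prices` instead of the sample string.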

Most modern tools go beyond this, too. Instead of relying on basic HTML, for example, they execute JavaScript to load dynamic content, take screenshots, fill out forms, click buttons, and even solve simple CAPTCHAs.

But here’s the challenge: not all websites roll out the welcome mat for scrapers. Many deploy anti-bot measures like rate limiting (controlling the rate of requests from external parties), IP blocking, and complex CAPTCHAs.

Web scraping examples: What can you actually do with web scraping?

Web scraping can be useful for personal and professional reasons. One of my favorite examples comes from Britney Muller in her Actionable AI for Marketers course. She set up a daily cron job—basically a scheduled task to run code—to scrape her local sub shop’s website so she’d get an alert whenever her favorite flavor was on the day’s menu.

But we’re not here to talk about a prosciutto and fig panino.

There are thousands of job boards built on top of web scraping. LinkedIn itself uses web scraping to help companies publish jobs from their career page to LinkedIn automatically. (Note that scraping content from LinkedIn itself is against their TOS.) Thousands of price comparison sites and real estate portals are also built this way.

And another really popular use case is using scraped data to enrich sales leads so you have more information to work with in your outreach. For example, you might use a tool like Apollo or Clay to get data on your leads and then have Zapier automatically send that data over to your CRM.

Or you might use web scraping to audit and gather details from your website or the competition’s—that’s the premise of using a tool like Screaming Frog.

Web scraping tools: How can you get started with web scraping?

It’s been a cat-and-mouse game between website security and web scrapers ever since scrapers first appeared, so depending on what you’re trying to do, you might have to try several approaches to get the data you want.

Keep in mind that web scraping can get a bit technical, but there are solutions for every level of understanding, including beginners.

Let’s look at the options, starting with those beginner-friendly tools.

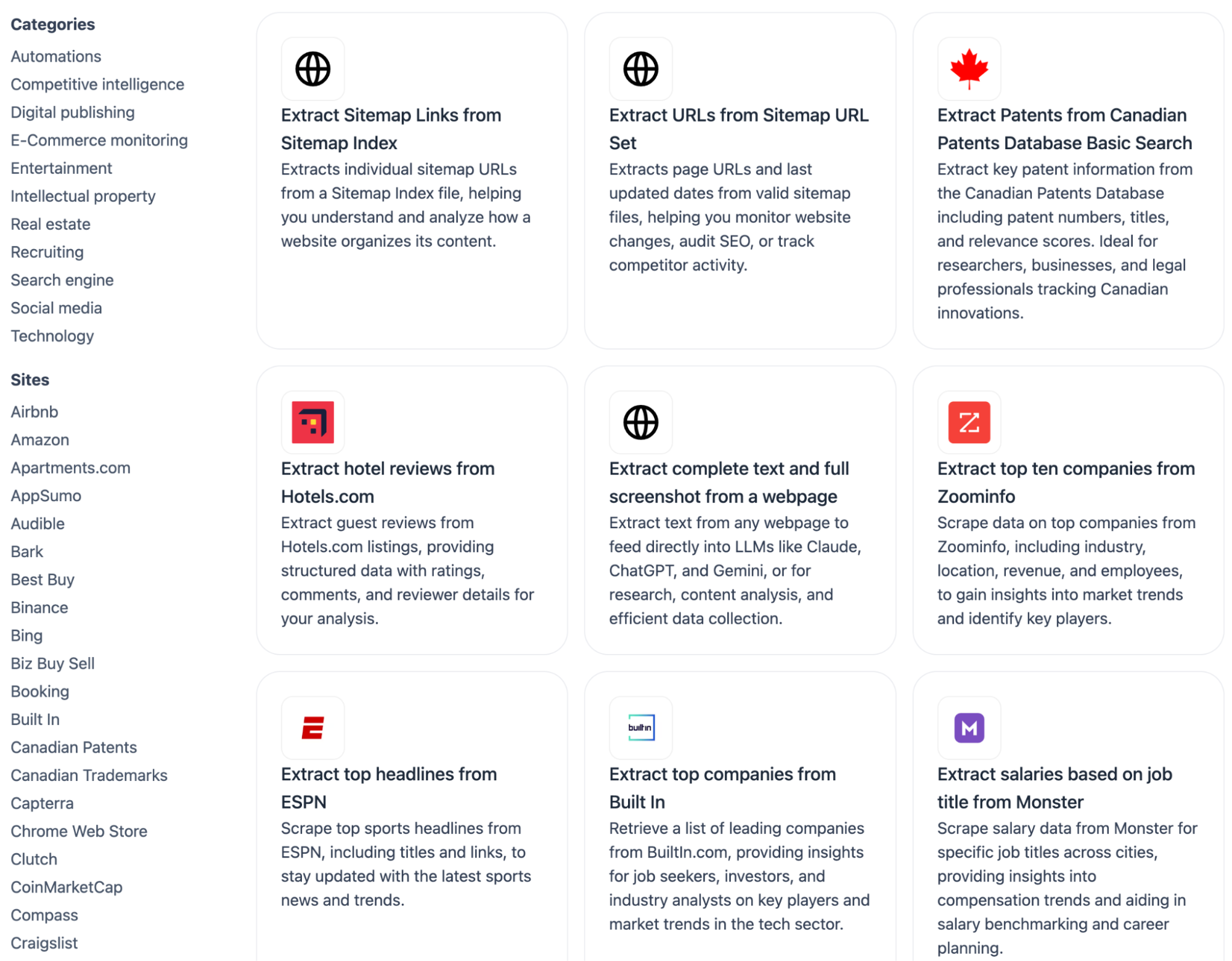

Browse AI and Zapier

I keep running across situations where I need to implement web scraping to get useful data into my vibe coding apps (and other workflows).

I usually start with point-and-click web scraping tools. Browse AI, for example, lets you “train” a robot by recording your actions on a webpage. You click through the data you want, and the tool creates an automated script that repeats those actions.

Of everything I’ve tried, I’ve found this to be the most beginner-friendly way to use scrapers to get the data you want. The learning curve is low, and it’s flexible in terms of setting it up to get the exact data you’re most interested in.

Browse AI has built-in functionality to bypass anti-bot measures with proxy management, automatic retries, and rate limiting.

You can use Browse AI for:

- Monitoring competitor pricing (or other changes), such as setting up alerts when prices drop or when they launch new products
- Lead generation, by scraping contact info from directories and job boards
- Content research, like gathering article titles, publication dates, and author info
- Compiling real estate data, such as property listings, prices, and descriptions

The best part is that Browse AI offers pre-built robots: templates that can get you started quickly.

I also love that Browse AI integrates with tools like Google Sheets and Zapier, so you can take your data off-platform and add additional layers of usefulness.

For example, once Browse AI does its thing, Zapier can help you take action on Browse AI data by sending the results to all the other apps you use. Here are some examples of how it might work:

- Scraping competitor pricing: Zapier sends Slack alerts when prices drop by 10%+.
- Tracking product reviews: Zapier creates tasks in your project management tool for detected negative sentiment.
- Gathering social mentions: Zapier updates your marketing dashboard and sends weekly summaries.
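The "prices drop by 10%+" rule in the first example comes down to a small piece of arithmetic. In Zapier you would normally express it as a filter or a code step, so treat this as a rough sketch of the logic only; the function names and the default threshold are assumptions.

```python
def percent_drop(old_price, new_price):
    """Return how far the price fell, as a fraction of the old price."""
    if old_price <= 0:
        raise ValueError("old_price must be positive")
    return (old_price - new_price) / old_price


def should_alert(old_price, new_price, threshold=0.10):
    """True when the price fell by at least `threshold` (10% by default)."""
    return percent_drop(old_price, new_price) >= threshold


print(should_alert(100, 90))   # a 10% drop meets the threshold
print(should_alert(100, 95))   # a 5% drop does not
```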

Here are a few pre-built templates to get you started, or you can learn more about how to automate web scraping with Browse AI and Zapier.

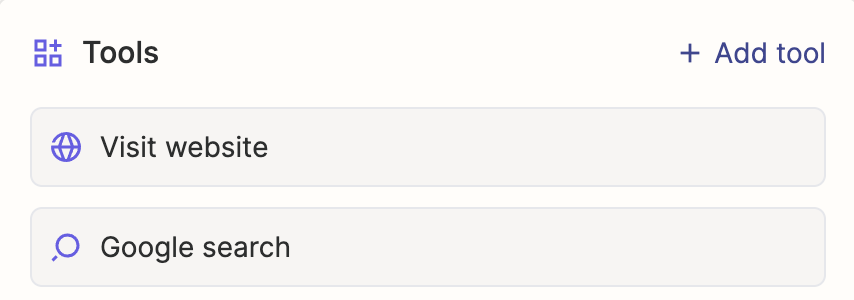

Zapier Agents

Zapier Agents is another great no-code solution that can do a lot of the heavy lifting for you. You can either connect it to Browse AI or use basic web scraping functionality with the Visit website tool. (You can see an example of how the latter option works with this news story categorizer agent.)

Zapier Agents lets you build automated workflows—with web scraping built in—using natural language, and you can orchestrate entire systems based on the data you extract. Learn more about Zapier Agents, or get started for free.

Data enrichment tools

Data enrichment is a really popular web scraping use case. If all you want to do is get more info on your leads, try a data enrichment tool like ZoomInfo, Apollo, or Clay. It’ll do the scraping for you, and when you connect it to Zapier, you can send that data wherever you need it. Here are some pre-made workflows to give you an idea of what’s possible.

Apify’s Web Scraper MCP

Apify’s Web Scraper MCP is another straightforward solution for getting started with web scraping. It uses JavaScript to extract structured data from web pages and provides some advanced functionality on top of built-in web browsing tools. And because it integrates with Claude Desktop, it’s a great one to use straight from your AI chatbot.

Python libraries

Now we’re moving into the more technical tools, where you’ll have to either know a little code or be comfortable using AI coding assistants.

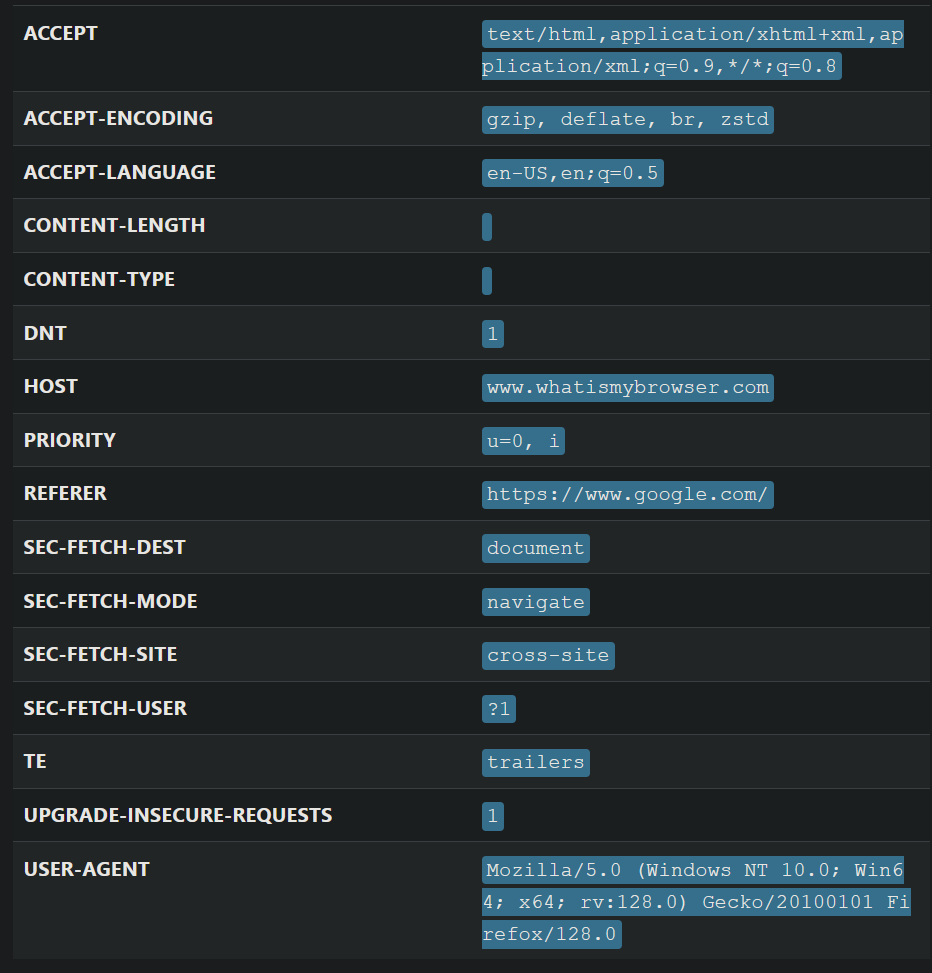

Using Python libraries, you can send HTTP requests to websites and parse the HTML response. Usually, this involves using the Python requests module to handle the HTTP calls and return the raw HTML. Then, you can use Python’s Beautiful Soup library (beautifulsoup4) to parse and make sense of that raw HTML.

(Yes, the Python library Beautiful Soup is named after the Alice in Wonderland song. It’s a nod to “tag soup,” which refers to poorly structured or messy HTML code. Python libraries are notorious for their whimsical names.)
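Here is a minimal sketch of that requests plus Beautiful Soup pairing. It assumes both packages are installed (`pip install requests beautifulsoup4`) and that the headlines you want live in `<h2>` tags, which is just an illustrative guess; the sample HTML and function names are made up so the parsing step can run without touching a real site.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url):
    """Download a page and return its raw HTML, raising on HTTP errors."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return response.text


def extract_headlines(html):
    """Parse raw HTML and return the text of every <h2> element."""
    soup = BeautifulSoup(html, "html.parser")
    return [h2.get_text(strip=True) for h2 in soup.find_all("h2")]


# Hypothetical stand-in for fetch_html("https://example.com/news").
SAMPLE_HTML = """
<html><body>
  <h2> City council approves new bike lanes </h2>
  <p>Story text...</p>
  <h2>Local bakery wins award</h2>
</body></html>
"""

print(extract_headlines(SAMPLE_HTML))
```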

Here’s the thing: basic requests won’t bypass bot blockers by default because they look obviously automated. Say you want to enter an invitation-only black tie event without an invitation. If you show up in swim trunks, you’re not going to make it past the bouncer. But show up in a tux, and you might blend in just enough.

That’s why you need to do things like adding headers, user agents, and proxy rotation. Headers and user agents are like dressing appropriately for the web: they help your bot blend in by appearing as a regular browser instead of standing out as automated software. And proxy rotation is akin to switching your digital appearance after a specific number of requests. After all, a human would only visit a handful of pages in a sitting. If your bot accesses 5,000 webpages in 10 minutes, you’ll stand out. With proxy rotation, you can lay low by switching the proxy after processing a limited number of webpages. You might also consider adding delays between your requests, both to be respectful (so you don’t overload servers) and to avoid rate limits.
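A rough sketch of those three ideas (browser-like headers, proxy rotation, and delays) might look like the following. It uses only the standard library; the proxy addresses, the User-Agent string, and the default numbers are placeholders I made up, and `polite_fetch` is defined but not called here because it would hit the network.

```python
import itertools
import random
import time
from urllib.request import ProxyHandler, Request, build_opener

# Example browser-like headers; the exact values are assumptions.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/124.0 Safari/537.36"
    ),
    "Accept-Language": "en-US,en;q=0.9",
}

# Hypothetical proxies; swap in ones you are actually allowed to use.
PROXIES = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]


def proxy_schedule(proxies, requests_per_proxy):
    """Yield a proxy per request, switching after `requests_per_proxy` uses."""
    for proxy in itertools.cycle(proxies):
        for _ in range(requests_per_proxy):
            yield proxy


def build_request(url):
    """Attach browser-like headers so the request looks less automated."""
    return Request(url, headers=BROWSER_HEADERS)


def polite_fetch(urls, requests_per_proxy=3, min_delay=1.0, max_delay=3.0):
    """Fetch each URL through a rotating proxy, pausing between requests."""
    schedule = proxy_schedule(PROXIES, requests_per_proxy)
    pages = []
    for url in urls:
        proxy = next(schedule)
        opener = build_opener(ProxyHandler({"http": proxy, "https": proxy}))
        with opener.open(build_request(url), timeout=10) as response:
            pages.append(response.read())
        # A random pause keeps the request rate low and less uniform.
        time.sleep(random.uniform(min_delay, max_delay))
    return pages
```

The same pattern carries over to the requests library, which accepts `headers=` and `proxies=` arguments on `requests.get`.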

For the best results with this approach, you’ll need to know how to navigate the CSS selectors on a page to zero in on the specific data you want the scraper to return. For instance, a career page might wrap each job title in an element with the class jobTitle, which you’d target with the CSS selector .jobTitle.
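As a small illustration of targeting data by selector, this sketch pulls job titles out of a made-up career page using Beautiful Soup's `select` method. The markup and the `jobTitle` class are assumptions; on a real site you would inspect the page to find the right selector.

```python
from bs4 import BeautifulSoup

# Hypothetical career-page markup.
SAMPLE_HTML = """
<div class="posting">
  <h3 class="jobTitle">Data Engineer</h3>
  <span class="location">Remote</span>
</div>
<div class="posting">
  <h3 class="jobTitle">Product Designer</h3>
  <span class="location">Austin, TX</span>
</div>
"""


def job_titles(html):
    """Return the text of every element matched by the .jobTitle selector."""
    soup = BeautifulSoup(html, "html.parser")
    # ".jobTitle" matches any element whose class list includes jobTitle.
    return [element.get_text(strip=True) for element in soup.select(".jobTitle")]


print(job_titles(SAMPLE_HTML))
```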

These are some of the oldest web scraping techniques; Beautiful Soup was introduced in 2004. Because this approach only reads the static HTML a server returns and doesn’t run JavaScript, it’s not well suited to more evolved websites that load content dynamically or employ mechanisms to stop bots. But it can be effective for scraping news sites for headlines and article text, doing academic research (such as scraping the text in papers, citations, and author info), and scraping government/public data that’s posted as HTML tables.

Without knowing all the ins and outs of Python, I’ve successfully built simple Python scrapers with the help of ChatGPT and Google Colab.

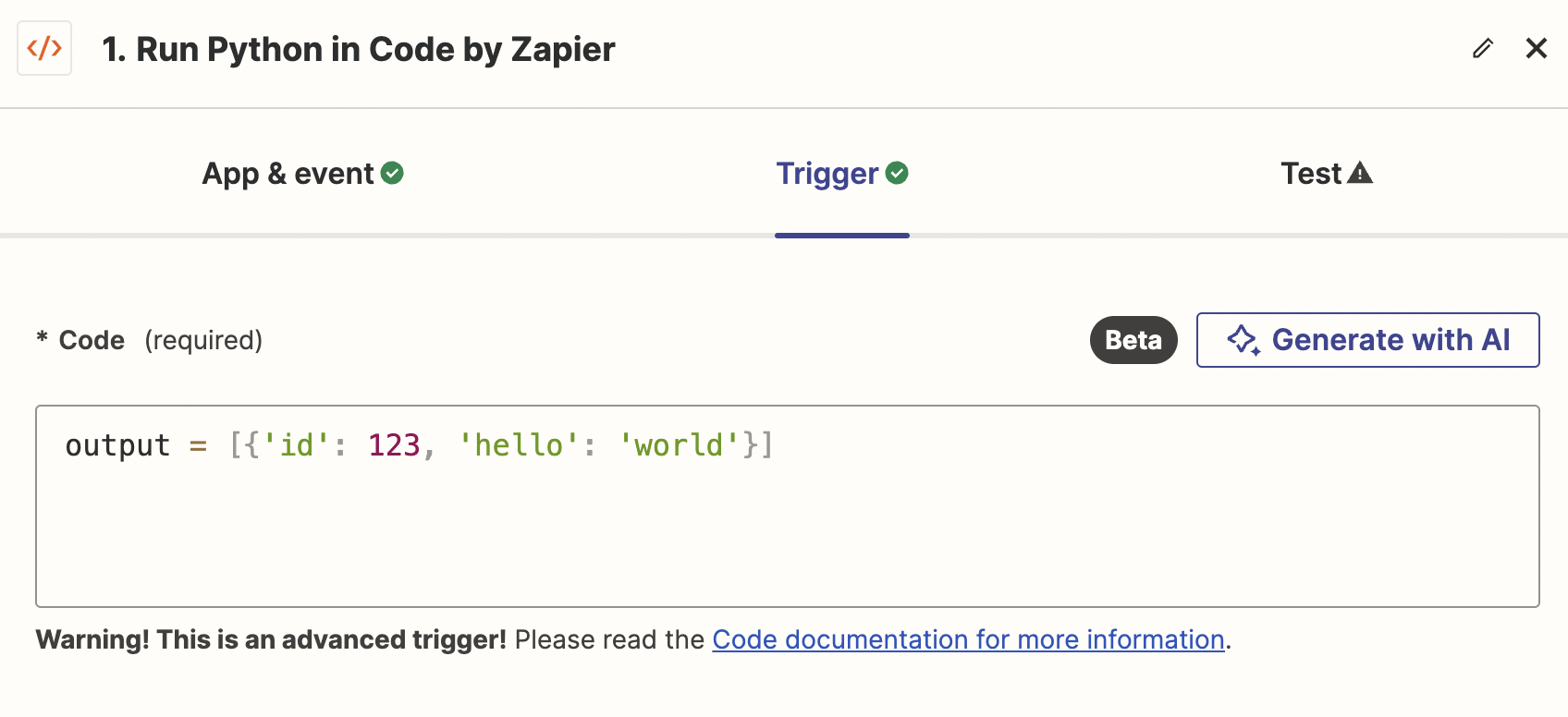

Python and Zapier

You can implement the power of Python within your Zapier automations, too, by adding a Code by Zapier action. Select the Run Python option, map the relevant data from your trigger step, then enter your Python code to parse HTML and extract the data. That way, you’re adding the web scraping into your existing workflows.
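Here is a rough idea of what the code in that step could look like. It assumes the Run Python action hands your mapped fields to the script as an `input_data` dictionary and reads the result from a variable named `output`, which is my understanding of how Code by Zapier works; check the current docs before relying on it. The `html` field name and the sample markup are hypothetical, and the sketch sticks to the standard library in case third-party packages aren't available in that environment.

```python
import re

# Stand-in so the sketch runs outside Zapier. Inside a Code step,
# Zapier is assumed to supply input_data from the fields you map.
input_data = {
    "html": (
        "<html><head><title>Pricing | Example Co</title></head>"
        "<body>Plans from $19.00/mo</body></html>"
    )
}

html = input_data.get("html", "")

# Regular expressions are brittle on messy HTML; fine for a quick sketch.
title_match = re.search(r"<title>(.*?)</title>", html, re.IGNORECASE | re.DOTALL)
price_match = re.search(r"\$(\d+(?:\.\d{2})?)", html)

# Later Zap steps can map these keys as fields.
output = {
    "title": title_match.group(1).strip() if title_match else "",
    "price": price_match.group(1) if price_match else "",
}
```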

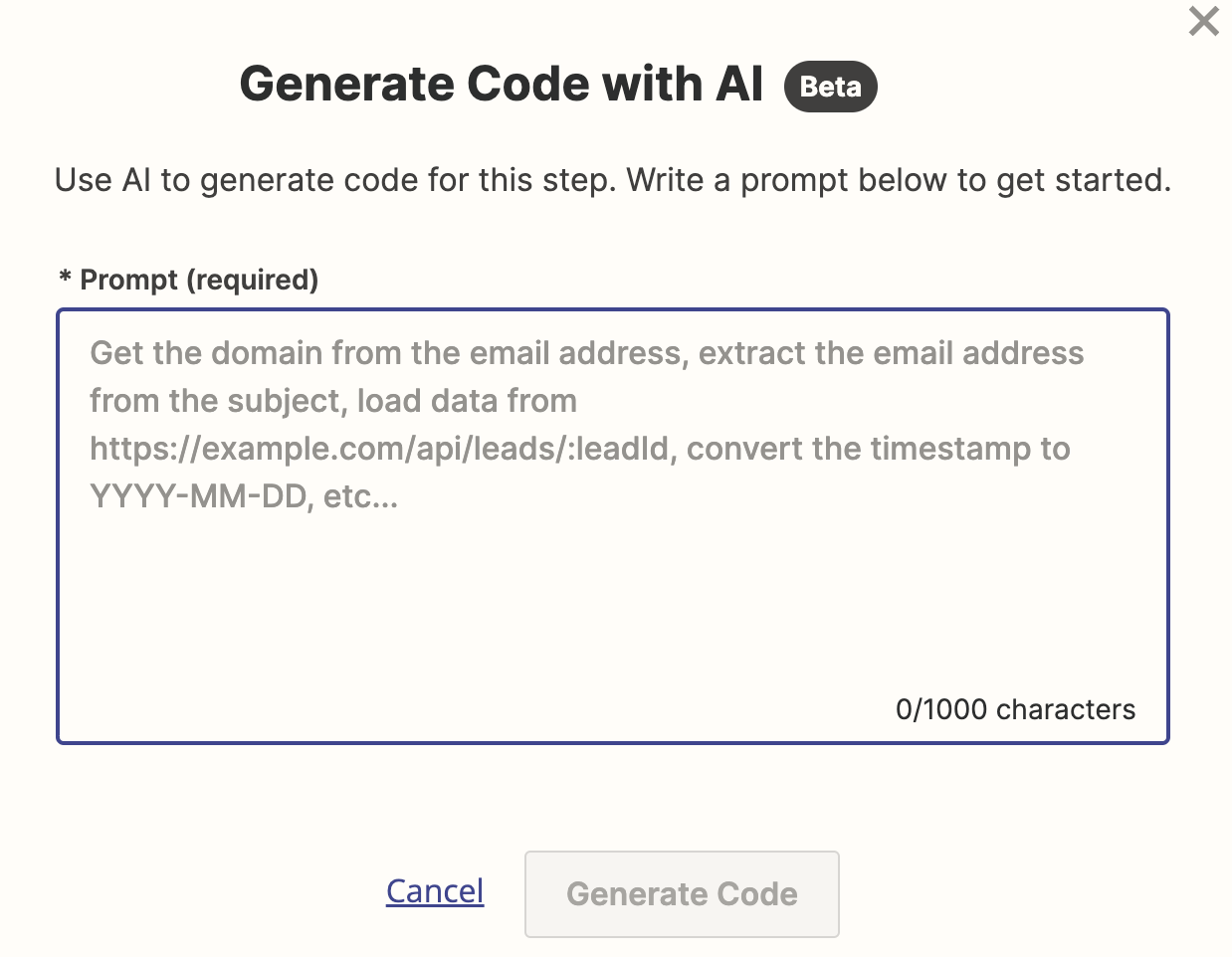

You can use Zapier’s Copilot AI assistant to help set up this step and/or write the Beautiful Soup Python code.

Advanced browser solutions

The greatest challenge with web scraping comes when simple solutions like Browse AI and even Python libraries don’t work—you’re still getting blocked.

In this case, you want a more advanced solution that can programmatically control a real browser like Chrome, Firefox, or Edge. These tools can take actions like clicking buttons, taking screenshots, filling forms, scrolling pages, and waiting for JavaScript to load. Essentially, you’re using them to replicate human behavior.

They’re much better at handling blockers compared to basic scraping because they use real browsers to navigate websites. And you can layer in the functionality mentioned earlier: rotating user agents, handling cookies, and even simulating mobile devices to try to bypass difficult websites.

Here are the best options to consider in terms of Python and JavaScript libraries:

- Playwright: available in JavaScript/TypeScript, Python, Java, and .NET
- Selenium: available in multiple languages, including Python and JavaScript
- Puppeteer: available in JavaScript only

Selenium is one of the oldest options, first released in 2004, and it has plenty of documentation available to help customize it to your needs. But some call it a “beginner’s trap” because it’s a bit misleading: easy to start using at first blush but rife with unexpected challenges.

Of the three, Playwright is generally the fastest and most reliable option, with built-in support for multiple browsers (Chromium, Firefox, and WebKit). I advise playing with it first and trying the others if it fails for your use case.
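If you want a feel for Playwright before committing, a minimal sketch with its Python sync API could look like this. It assumes you have run `pip install playwright` and `playwright install chromium`; the function names and selector are placeholders, and the import sits inside `scrape` only so the helper can be tried without a browser installed.

```python
def collect_text(page, selector):
    """Return the stripped text of every element matching `selector`."""
    return [text.strip() for text in page.locator(selector).all_inner_texts()]


def scrape(url, selector):
    """Open `url` in headless Chromium and collect text for `selector`."""
    # Imported here so the helper above works without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as playwright:
        browser = playwright.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url)
            # Wait until JavaScript has rendered the elements we care about.
            page.wait_for_selector(selector)
            return collect_text(page, selector)
        finally:
            browser.close()
```

A call such as `scrape("https://example.com", "h1")` would launch the browser, wait for the heading to render, and return its text as a list.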

Advanced browser solutions are best suited for larger projects, where you can spend a good amount of time setting up the ideal scraper to ensure the return on investment is worth it.

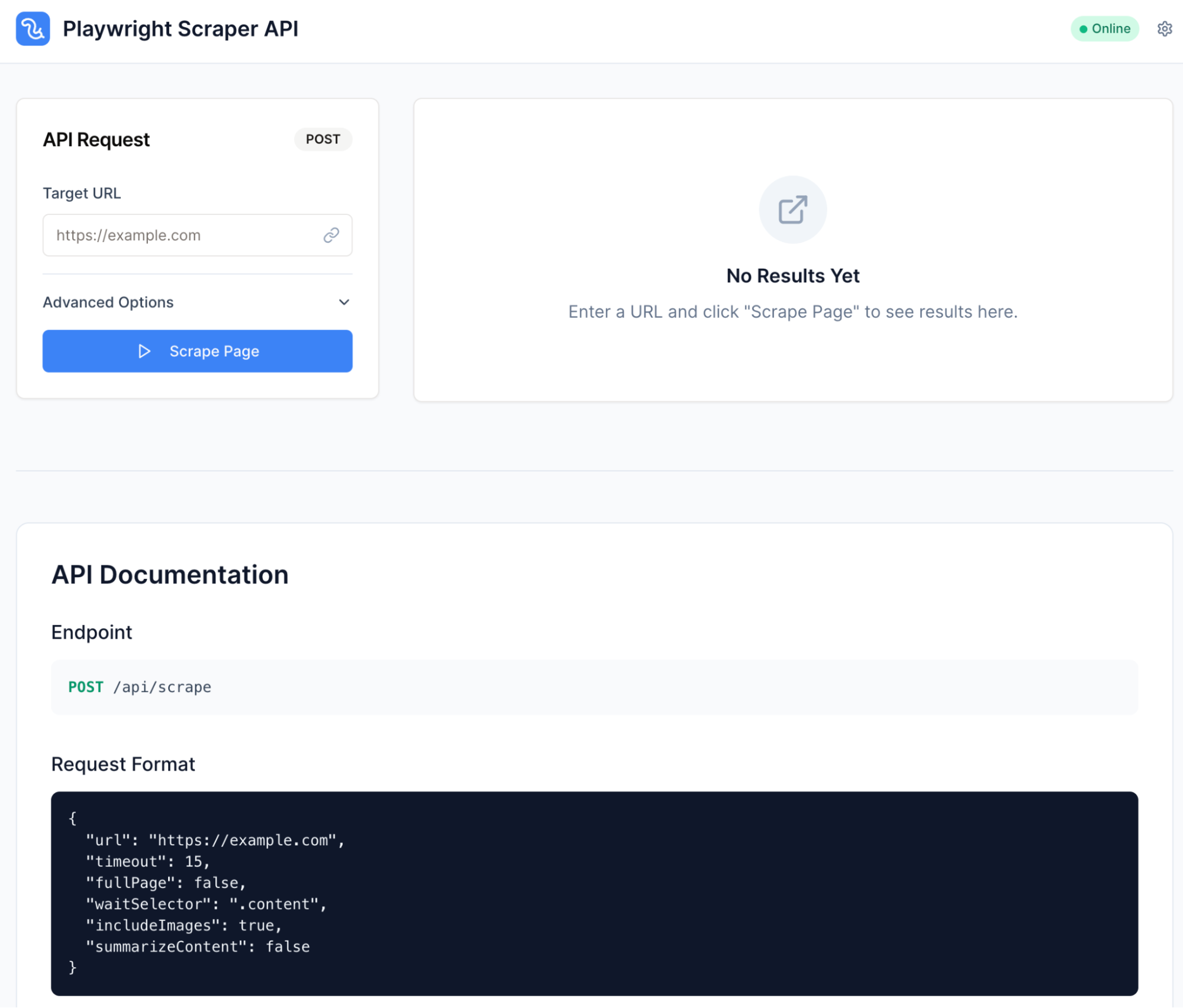

Using Playwright, I built an advanced scraper as a reusable tool for my vibe coding projects on Replit—I can use it as an API endpoint for other projects now.

Is web scraping legal? Web scraping ethics

The unfortunate answer: it depends. In some cases, like LinkedIn, it’s against a website’s terms of service. In other cases, web scraping could be copyright infringement; for example, you can’t just scrape The New Yorker and use its content on your website.

Then there’s the case of AI crawlers using web data to train large language models (LLMs) without explicit permission. There are legal battles happening over this as we speak. One emerging solution is publishers striking deals with AI crawlers to receive compensation for associated AI activity. Cloudflare recently launched technology that you can use to negotiate a partnership with AI crawlers seeking access to your website data.

But generally, when you’re using web scraping for things like competitor analysis, price comparison, and data enrichment, it will usually be considered acceptable under fair use principles.

In general, best practices for staying on the right side of the law and prioritizing ethical activities include sticking to public data, respecting robots.txt files, and not overloading servers with requests. And when in doubt, check the terms of service.
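Respecting robots.txt is easy to automate with Python's built-in `urllib.robotparser`. The sketch below parses a made-up robots.txt so it runs offline; the rules, the `my-scraper` user agent name, and the URLs are all placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content.
SAMPLE_ROBOTS_TXT = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_rules(robots_txt):
    """Parse robots.txt text into a reusable rule checker."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = build_rules(SAMPLE_ROBOTS_TXT)

# Check each URL before requesting it.
print(rules.can_fetch("my-scraper", "https://example.com/products"))
print(rules.can_fetch("my-scraper", "https://example.com/private/report"))

# If the site asks for a delay between requests, honor it.
print(rules.crawl_delay("my-scraper"))
```

Against a live site, you would call `set_url` with the site's robots.txt address and then `read()` instead of parsing a string.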

Turn scraped data into business actions

Scraped data alone isn’t enough. You still need a way to route, process, and act on it at scale. That’s where Zapier comes in, acting as a bridge between your scraped data and useful business workflows, so you can get your data where you need it, automatically.
